Operating System Concepts Book Notes

OS Introduction and Fundamentals

OS: Operating System

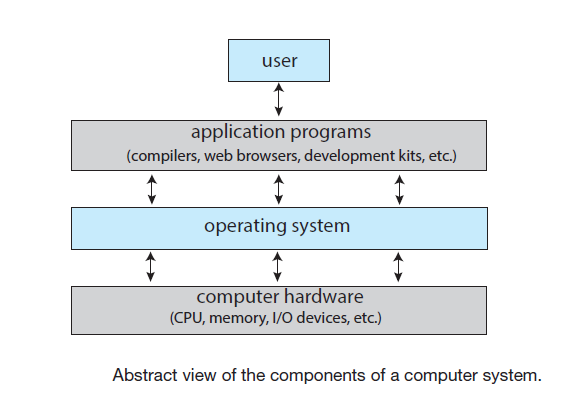

An OS acts as an intermediary between the computer user and the computer hardware.

Manages the execution of user programs to prevent errors and improper use of the system (Manages the computer hardware).

- Provides an environment in which a user can execute programs in a convenient and efficient manner

We can see the OS as a resource allocator (CPU time, memory space, file-storage space, I/O devices) to programs

- Decides how to allocate resources to specific programs and users so that it can operate the computer system efficiently and fairly.

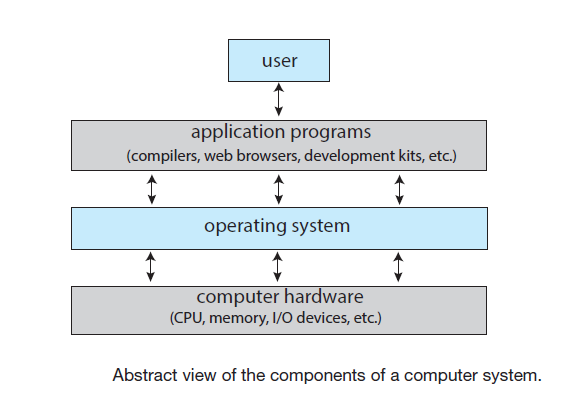

Computer system components:

- The Hardware: Provides the basic computing resources

- CPU, Memory and I/O devices

- The Application programs: Define the ways in which the HW resources are used to solve the user computing problems

For example: compilers, word processors, web browsers, etc.

- The OS: controls the hardware and coordinates its use among the various application programs for the various users

We can also view a computer system as consisting of hardware, software and data

An operating system performs no useful function by itself since it only provides an environment within which other programs can do useful work.

The OS must decide how to allocate resources to specific programs and users so that it can operate the computer system efficiently and fairly

The OS is a control program that manages the execution of user programs to prevent errors and improper use of the system.

Computers evolved into general-purpose, multifunction mainframes, and that’s when operating systems were born.

Operating systems exist because they offer a reasonable way to solve the problem of creating a usable computing system.

The fundamental goal of computer systems is to execute programs and to make it easy to solve user problems. Computer HW is constructed toward this goal. Since bare hardware alone is not particularly easy to use, application programs are developed. These programs require certain common operations, such as those controlling the I/O devices. The common functions of controlling and allocating resources are then brought together into one piece of software: the operating system.

For a general definition the operating system includes the always running kernel, middleware frameworks that ease application development and provide features, and system programs that aid in managing the system while it is running.

Computer-System Organization

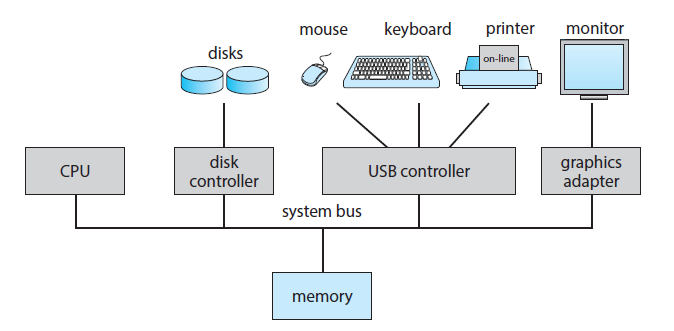

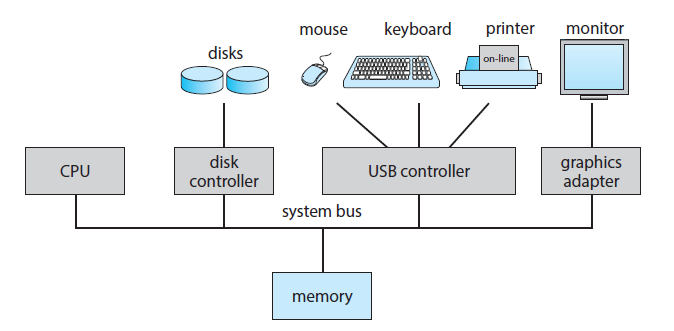

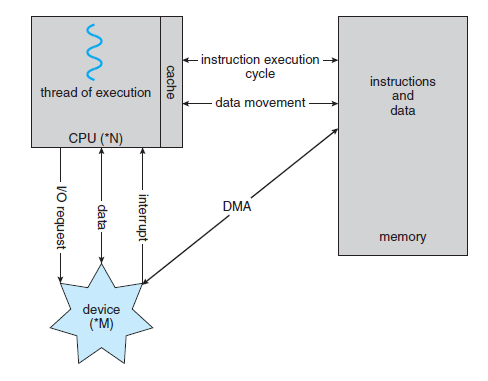

A general-purpose computer system consists of one or more CPUs and a number of device controllers connected through a common bus that provides access between components and shared memory

Each device controller is in charge of a specific type of peripheral device and is responsible for moving the data between the peripheral devices that it controls and its local buffer storage

- A device controller maintains some local buffer storage and a set of special-purpose registers.

Typically, operating systems have a device driver for each device controller which provides the rest of the operating system with a uniform interface to the device

The CPU and the device controllers can execute in parallel, competing for memory cycles

- To ensure orderly access to the shared memory, a memory controller synchronizes access to the memory.

Interrupts

Hardware may trigger an interrupt at any time by sending a signal to the CPU, usually by way of the system bus

- There may be many buses within a computer system, but the system bus is the main communications path between the major components.

When the CPU is interrupted, it stops what it is doing and immediately and must transfer control to the appropriate interrupt service routine this can be done by first calling so a generic routine to examine the interrupt information and then calls the interrupt-specific handler

A table of pointers to interrupt routines (interrupt vector) is also usually used and stored in low memory

The interrupt architecture must also save the state information of whatever was interrupted, so that it can restore this information after servicing the interrupt. If the interrupt routine needs to modify the processor state—for instance, by modifying register values—it must explicitly save the current state and then restore that state before returning as though the interrupt had not occurred.

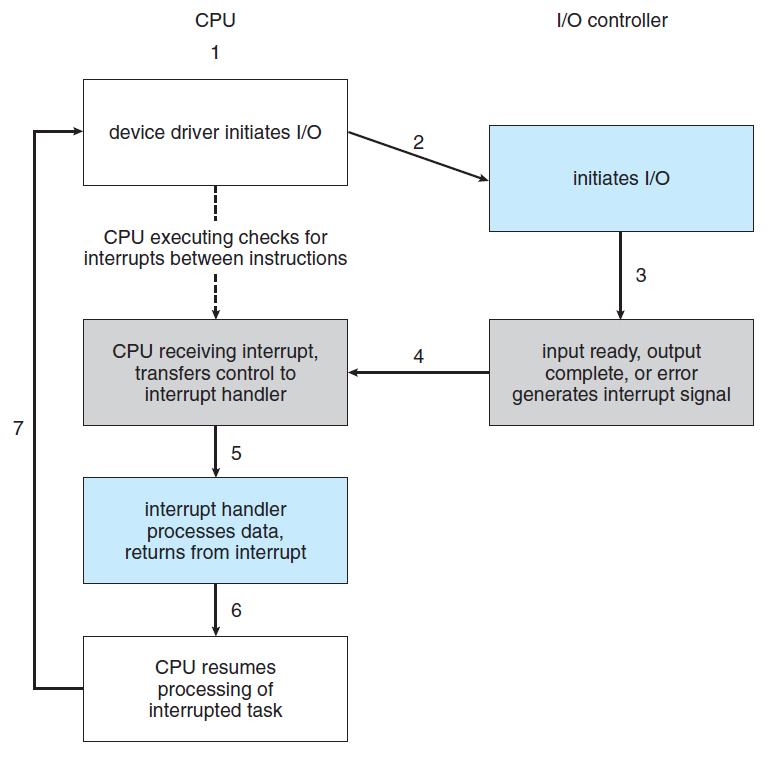

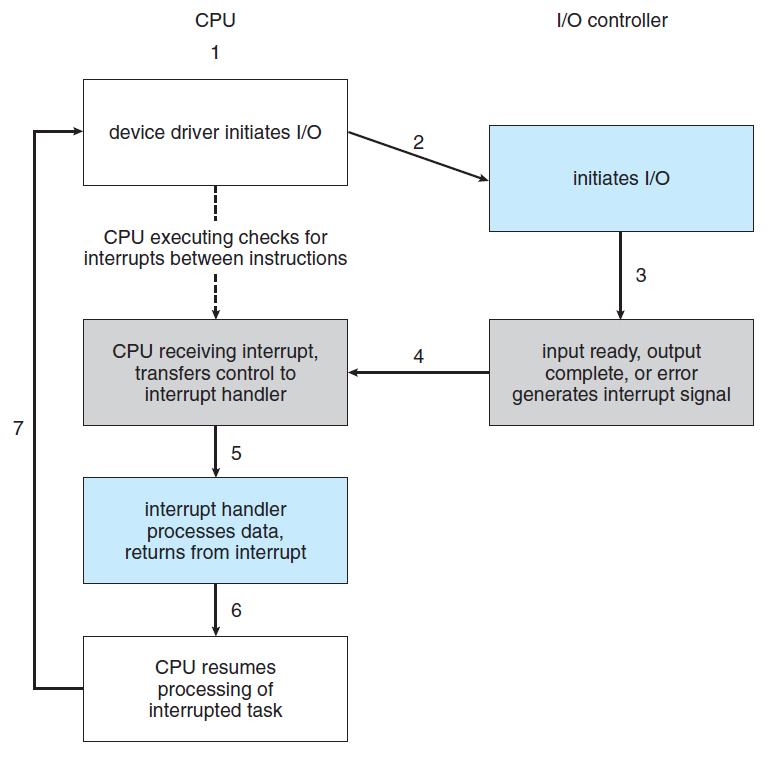

Basic interrupt mechanism implementation general flow for asynchronous events:

- The CPU HW senses an interrupt-request wire after executing every instruction.

- device controller raises an interrupt by asserting a signal on the interrupt request line

- The CPU detects or catches that a controller has asserted a signal on the interrupt-request line

- Reads the interrupt number, jumps to the interrupt-handler routine in the interrupt vector and starts executing the interrupt-handler which dispatches and clears the interrupt by servicing the device. This means doing the following:

- saves any state it will be changing during its operation

- performs the necessary processing

- performs a state restore, and executes a return from interrupt instruction

Modern systems usually have interrupt-controller hardware which provide more sophisticated interrupt handling features like:

- defer interrupt handling during critical processing.

- multilevel interrupts, so that the operating system can distinguish between high- and low-priority

Most CPUs have two interrupt request lines

- non-maskable interrupt: is reserved for events such as unrecoverable memory errors

- maskable interrupt:

- It can be turned off by the CPU before the execution of critical instruction sequences that must not be interrupted

- It is used by device controllers to request service

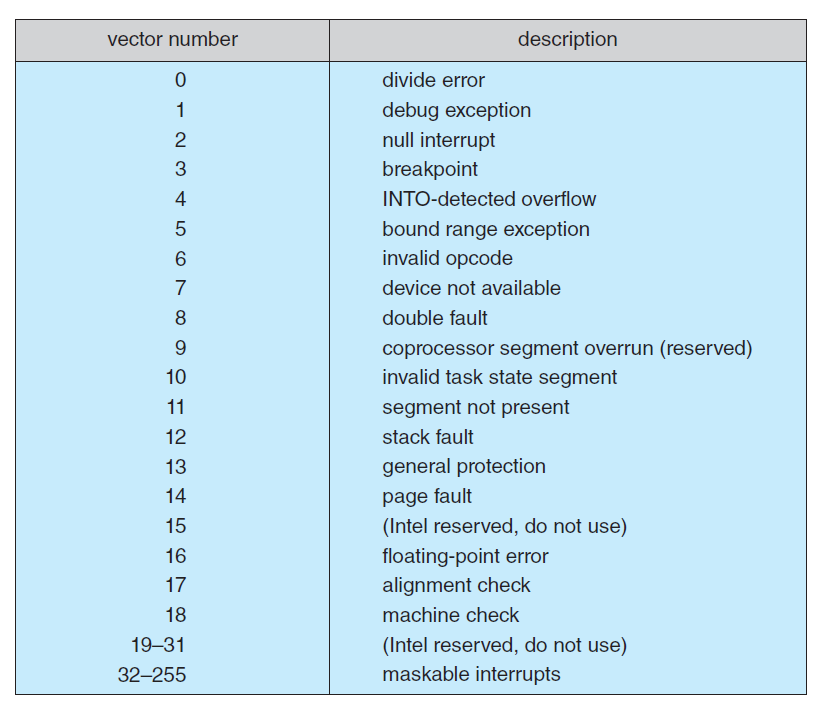

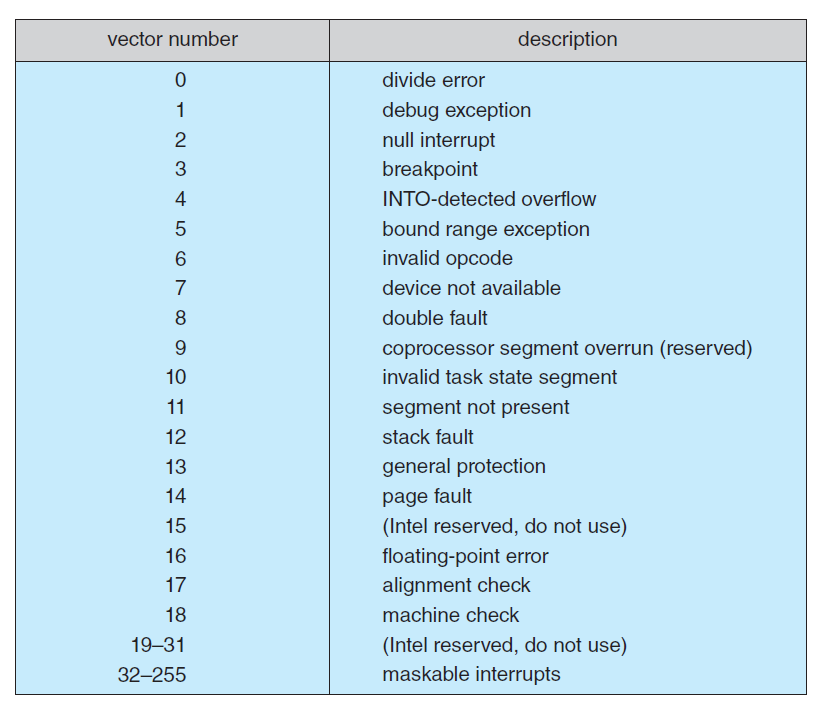

Vector table example where events from 0 to 31 are non-maskable, used to signal various error conditions, the events from 32 to 255 are maskable, used for purposes such as device-generated interrupts.

- The interrupt mechanism also implements a system of interrupt priority levels. this make it possible for a high-priority interrupt to preempt the execution of a low-priority interrupt.

interrupt-chaining

- It's a way of implemeting the way a service routine is called. Each element in the interrupt vector points to the head of a list of interrupt handlers. When an interrupt is raised, the handlers on the corresponding list are called one by one, until one is found that can service the request. This structure is a compromise between the overhead of a huge interrupt table and the inefficiency of dispatching to a single interrupt handler

Interrupts summary

- Interrupts are used to handle asynchronous events. Device controllers and hardware faults raise interrupts. To enable the most urgent work to be done first interrupt priorities are ussed. Because interrupts are used so heavily for time-sensitive processing, efficient interrupt handling is required for good system performance.

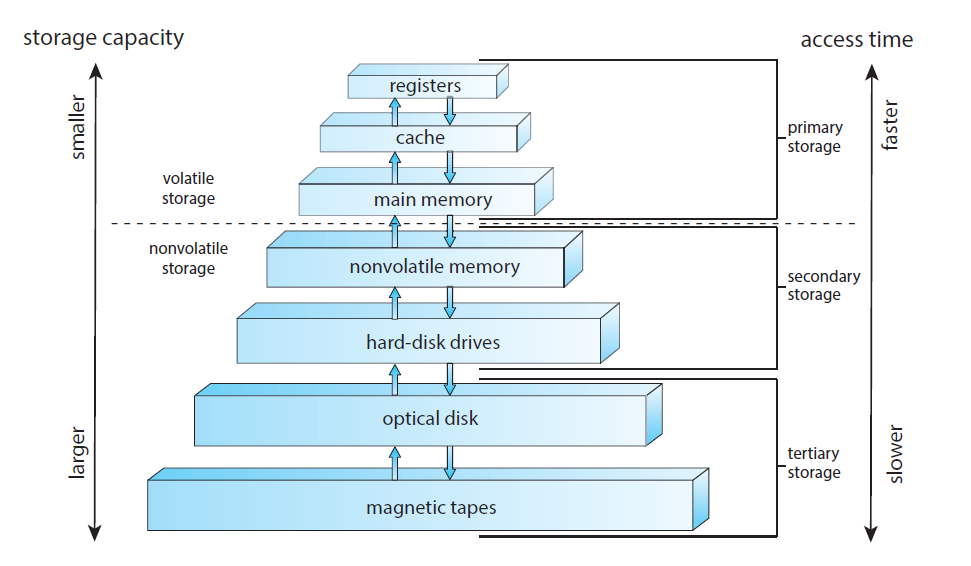

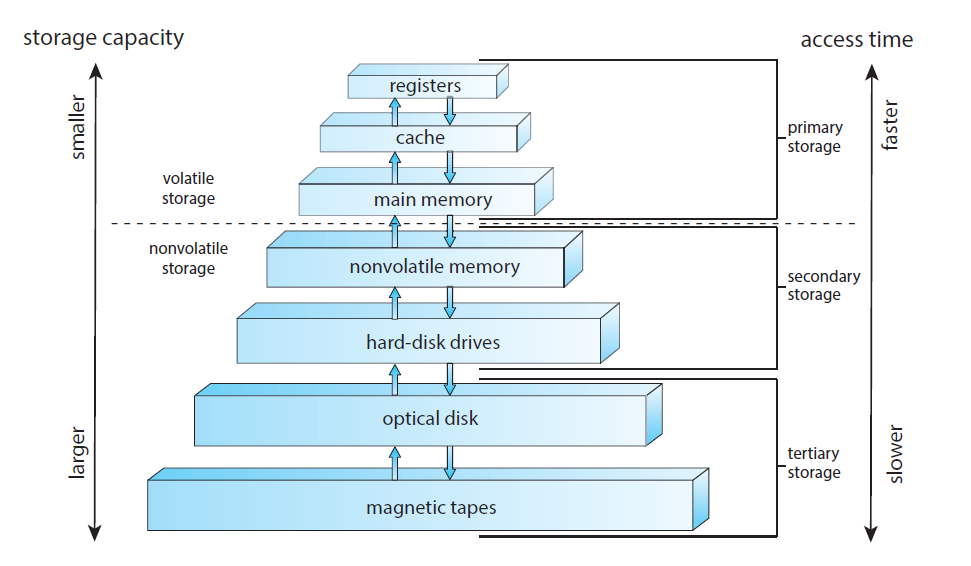

Storage

- The CPU can load instructions only from memory, so any programs must first be loaded into memory to run (main memory).

- the first program to run on computer power-on is a bootstrap program which then loads the operating system and this most be stored in non-volatile memory

- The load and store instructions are used to move date to/from main memory tofrom internal register within the CPU

- Due space and volatility problems in main memory (RAM) which is is small and non-volatile systems provide secondary storage as an extension of main memory but slower.

- The main requirement for secondary storage is that it be able to hold large quantities of data permanently like hard-disk drives (HDDs) and other nonvolatile memory (NVM) devices

- Most programs (system and application) are stored in secondary storage until they are loaded into memory. Many programs then use secondary storage as both the source and the destination of their processing

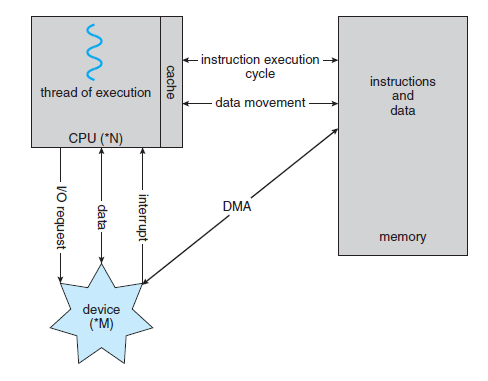

I/O Structure

A large portion of operating system code is dedicated to managing I/O,

Large data movement from I/O is done usisng direct memory access (DMA).

- Usually buffers, pointers, and counters need to be set up for the I/O device

- Then the device controller transfers an entire block of data directly to or from the device and main memory, with no intervention by the CPU

- Finally an interrupt is generated per block fo transferred, to tell the device driver that the operation has completed

Computer-System Architecture

- CPU: A term to refer to the hardware that executes instructions.

- Processor: A physical chip that contains one or more cores.

- Core: The basic computation unit of the CPU.

- Multicore: Including multiple computing cores on the same processor.

- Multiprocessor Including multiple processors.

Single-Processor Systems

Systems with one CPU with a single processing core.

- The core is the component that executes instructions and uses registers for storing data locally

- The one main CPU with its core is capable of executing a general-purpose instruction set, including instructions from processes.

These systems have other special-purpose processors as well, like the ones inside network and graphics controllers but all of these special-purpose processors run a limited instruction set and do not run processes

- For example, a disk-controller microprocessor receives a sequence of requests from the main CPU core and implements its own disk queue and scheduling algorithm. The OS cannot communicate with these processors, they do their jobs autonomously

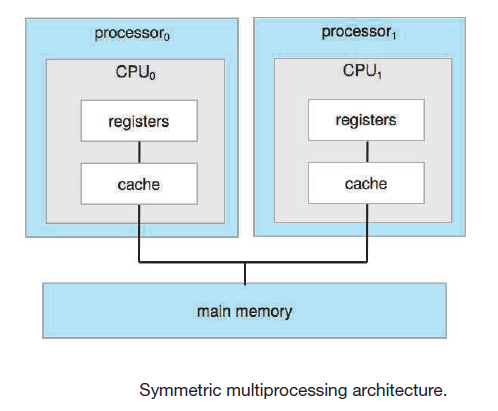

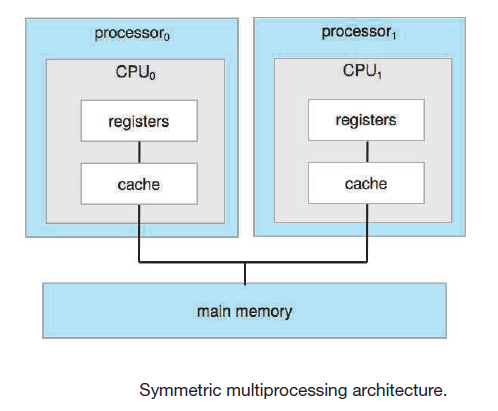

Multiprocessor Systems

- Systems that have two (or more) processors, each with a single-core CPU. The processors share the computer bus and sometimes the clock, memory, and peripheral devices.

- The primary advantage of multiprocessor systems is increased throughput

- When multiple processors cooperate overhead is incurred dues to keeping all the parts working correctly and due to contention for shared resources

Symmetric Multiprocessing (SMP)

- System in in which each CPU processor performs all tasks, including operating-system functions and user processes.

- Each CPU processor has its own set of registers, as well as a private or local cache but share physical memory over the system bus

N processes can run if there are N CPUs but the processors are separated so processes and resources such as memory cannot be shared dynamically among the various processors- Example of Multiprocessor system:

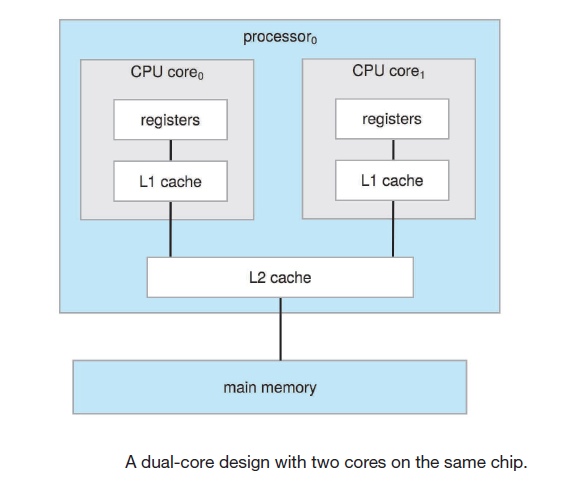

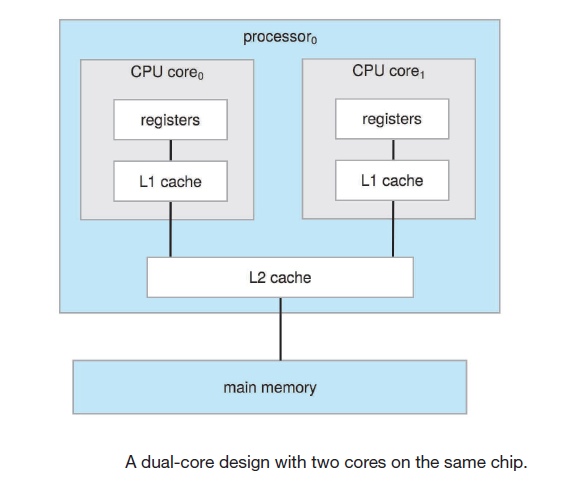

Multicore

- Refers to multiple computing cores reside on a single chip (processor).

- Currently multicore is also included in the definition of multiprocessor

- Multicore systems can be more efficient than multiple chips with single cores because of power consumption and cause on-chip communication is faster than between-chip communication.

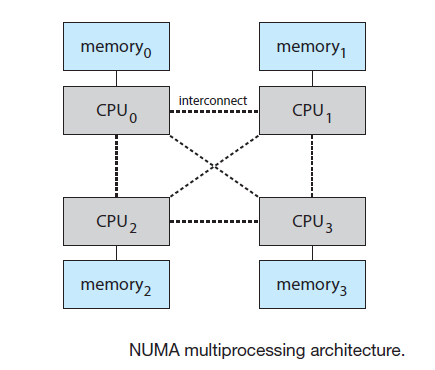

- They use local and shared caches,

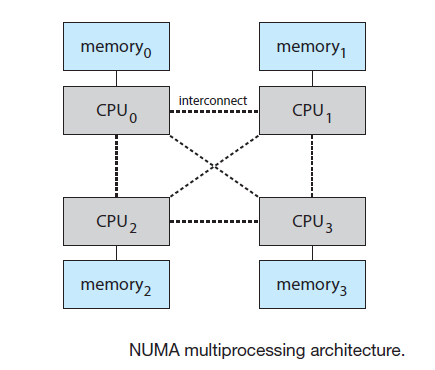

- Instead of providing each CPU with its own local memory that is accessed via a local bus. The CPUs are connected by a shared system interconnect, so that all CPUs share one physical address space

- unlike bus interconnection NUMA allows multiple memory references at the same time one CPU can access one moery module while other CPU is accessing other module

- They can scale to accommodate a large number of processors

- A potential drawback with a NUMA system is increased latency when a CPU must access resources across the system interconnect (E.g. CPU0 wants to access CPU3)

Clustered systems

- They are composed of two or more individual systems—or nodes—joined together; each node is typically a multicore system.

- The are usually used to provide high-availability ehich means a service that will continue even if one or more systems in the cluster fail

Operating-System Operations

The OS depends on a bootstrap program that initializes all aspects of the system, from CPU registers to device controllers to memory contents.

- The bootstrap program must know how to load the operating system and how to start executing that system. To accomplish this goal, the bootstrap program must locate the operating-system kernel and load it into memory.

Once the kernel is loaded and executing, it can start providing services to the system and its users.

Some services are provided outside of the kernel by system programs that are loaded into memory at boot time to become system daemons, which run the entire time the kernel is running

- For example On Linux, the first system program is

systemd and it starts many other daemons

Trap or exception is like an interrupt caused by an event/error internal to the processor, while general interrupts signal external events, exception are originally generated by the software which causes an internal CPU event like a zero division

The way the user app requests that an operating-system service be performed is by executing a special operation called a system call.

- When a system call is executed, it is typically treated by the hardware as a software interrupt.

Multiprogramming/Multitasking

One of the most important aspects of operating systems is the ability to run multiple programs (multiporgramming) since a single program cannot, in general, keep either the CPU or the I/O devices busy at all times.

In a multiprogrammed system, a program in execution is termed a process, the OS keeps multiple processes in memory simultaneously executes one and when the process may have to wait (dut to I/O or other task) the OS switches to another process

- As long as at least one process needs to execute, the CPU is never idle.

Multitasking is a logical extension of multiprogramming which is accomplished by switching between process frequently

Running multiple processes concurrently requires that their ability to affect one another be limited in all phases of the operating system, including

- process scheduling

- protect resources from inappropriate use

- disk, storage and memory management

- process synchronization and communication to avoid deadlocks

virtual memory is a technique that allows the execution of a process that is not completely in memory enables users to run programs that are larger than actual physical memory

- This abstracts main memory into a large, uniform array of storage, separating logical memory as viewed by the user from actual physical memory.

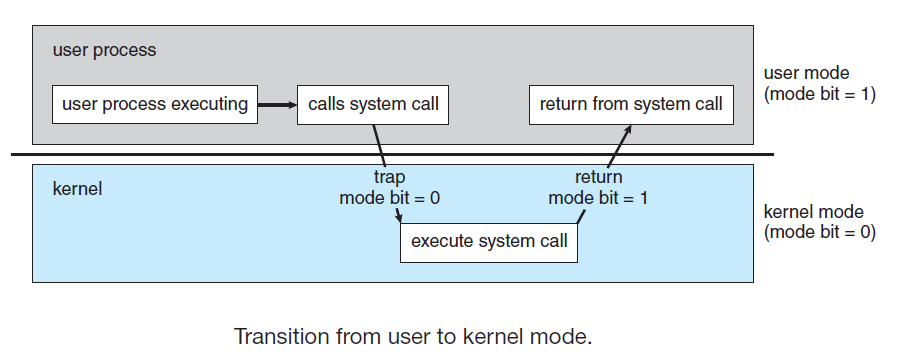

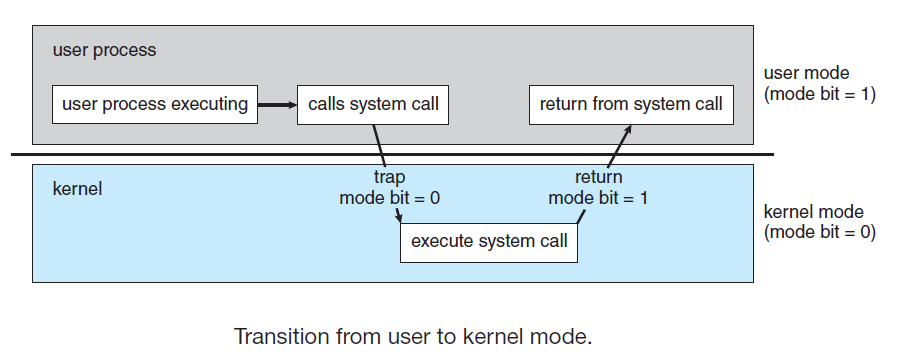

Dual-mode & Multimode

- The OS must ensure malicious programs dont cause cause other programs or the OS itself to execute incorrectly

- We must be able to distinguish between the execution of operating-system code and user-defined code

- Usually computer systems provide hardware support (the mode bit) that allows differentiation among various modes of execution, at least two modes user mode and kernel mode

- User application run in user mode and when it requests a service from the operating system (via a system call), the system must transition from user to kernel mode to fulfill the request

Timer

- Timers are used so the OS mantained control over the CPU

- They are used to prevent a user program from getting stuck and never returning control to the OS

jiffies is a kernel variable that represent the number of timer interrupts that have occurred since the system was booted.

- CPUs that support virtualization usually have a separate mode to indicate when the virtual machine manager (VMM) is in control of the system

- In this mode, the VMM may have more privileges than user processes but fewer than the kernel.

Resource manager

- The system’s CPU, memory space, file-storage space, and I/O devices are among the resources that the operating system must manage

Process Management

Memory Management

Main memory is a large array of bytes, a repository of quickly accessible data shared by the CPU and I/O devices.

The CPU reads instructions from main memory during the instruction-fetch cycle and both reads and writes data from main memory during the data-fetch cycle

the main memory is generally the only large storage device that the CPU is able to address and access directly, for the CPU to process data from disk, those data must first be transferred to main memory by CPU-generated I/O calls.

For a program to be executed, it must be mapped to absolute addresses and loaded into memory while executing it accesses program instructions and data from memory and when it terminates its memory space is declared available, and the next program can be loaded and executed

The OS is responsible for the following memory management tasks:

- Keeping track of which parts of memory are currently being used and which process is using them

- Allocating and deallocating memory space as needed

- Deciding which processes (or parts of processes) and data to move into and out of memory

File-System Management

- The operating system provides a uniform, logical view of information storage, abstracts the physical properties of its storage devices to define a logical storage unit

- The OS is responsible for the following File-System management tasks:

- Creating and deleting files

- Creating and deleting directories to organize files

- Supporting primitives for manipulating files and directories

- Mapping files onto mass storage

- Backing up files on stable (non-volatile) storage media

Mass-System Management

- The computer system must provide secondary storage to back up main memory.

- The OS is responsible for the following secondary storage management tasks:

- Mounting and unmounting

- Free-space management

- Storage allocation

- Disk scheduling

- Partitioning

- Protection

Cache Management

- When we need a particular piece of information, we first check whether it is in the cache. If it is,we use the information directly from the cache If it is not, we use the information from the source, putting a copy in the cache

- The programmer (or compiler) implements the register allocation and register-replacement algorithms to decide which information to keep in registers and which to keep in main memory.

- Cache coherency refers to making sure that an update data in one cache is immediately reflected in all other caches where the data resides. it is usually a hardware issue

- The movement of information between levels of a storage hierarchy may be either explicit or implicit, depending on the hardware design and the controlling operating-system software

- Data transfer from cache to CPU and registers is usually a hardware function, no OS intervention

- Transfer of data from disk to memory is usually controlled by the OS.

- In a hierarchical storage structure, the same data may appear in different levels of the storage system

I/O System Management

- One of the purposes of an operating system is to hide the peculiarities of specific hardware devices from the user in UNIX this is done by the I/O subsystem which consists of:

- A memory-management component

- A general device-driver interface

- Drivers for specific hardware devices

- Only the device driver knows the peculiarities of the specific device to which it is assigned.

Security and Protection

- Memory-addressing hardware ensures that a process can execute only within its own address space

- Protection is any mechanism for controlling the access of processes or users to the computer system resources

- A protection-oriented system provides ameans to distinguish between authorized and unauthorized usage

- The job of Security is to defend a system from external and internal attacks like: viruses, worms, DoS Attacks, identity theft, and theft of service

- Protection and security require the system to be able to distinguish among all its users. So user User identifier (user ID) and Group Identifier

- The UID and GID are associated with all of the user’s processes and threads.

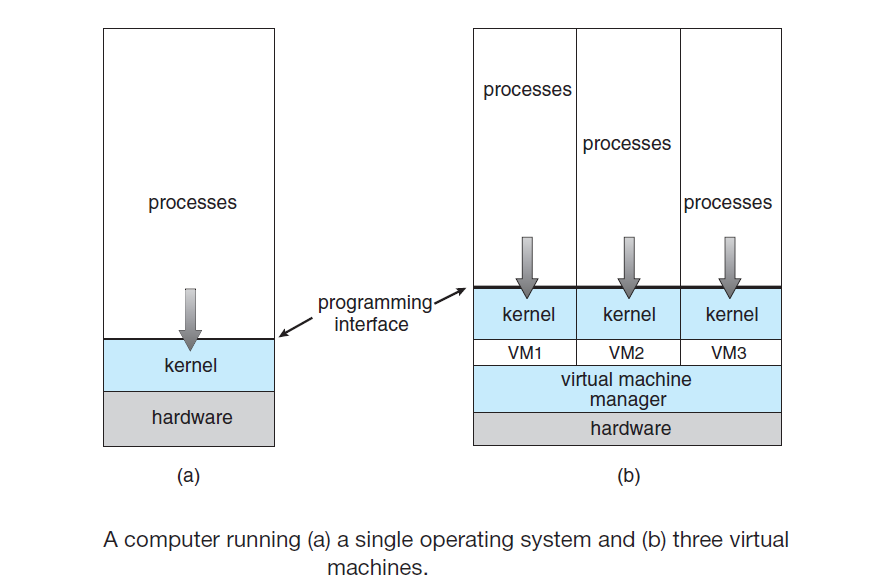

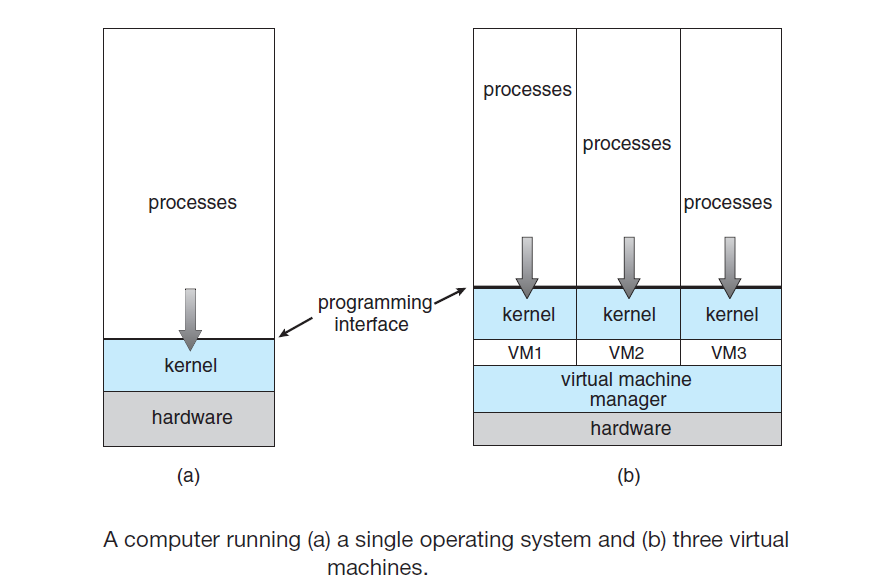

Virtualization

- Virtualization is a technology that allows us to abstract the hardware of a single computer (the CPU, memory, disk drives, network interface cards, and so forth) into several different execution environments, thereby creating the illusion that each separate environment is running on its own private computer.

- Virtualization allows operating systems to run as applications within other operating systems

- A user of a virtual machine can switch among the various operating systems in the same way a user can switch among the various processes running concurrently in a single operating system.

- Emulation involves simulating computer hardware in software

- The virtual machine manager (VMM) is the one that runs the guest operating systems, manages their resource use, and protects each guest from the others.

Distributed Systems

- A distributed system is a collection of physically separate computer systems that are networked to provide users with access to the various resources that the system maintains.

- Distributed systems depend on networking for their functionality

- A network operating system is an OS that provides features such as file sharing across the network, along with a communication scheme that allows different processes on different computers to exchange messages.

Kernel Data Structures

A list represents a collection of data values as a sequence that accommodate items of varying sizes and allow easy insertion and deletion of items the disadvantage is that the performance for any operation that requieres access to an element is linear (O(n))

The most common implementation of lists are:

- linked list

- singly linked list: each item points to its successor

- doubly linked list: each item points to its successor and predecessor

- circularly linked list: the last element in the list refers to the first element

- A stack is a sequentially ordered data structure that uses the last in, first out (LIFO) principle

- A queue is a sequentially ordered data structure that uses the first in, first out (FIFO) principle

A tree is a data structure that can be used to represent data hierarchically data is linked through parent–child relationships

- general tree: unlimited number of children

- binary tree: a parent may have at most two children

- binary search tree: It's a binary tree where

left child <= right child

A hash function takes data as its input, performs a numeric operation on the data, and returns a numeric value which can then be used as an index into a table to retrieve/store data

- A hash map: maps/associates [key:value] pairs using a hash function, we apply the hash function to the key to obtain the value from the hash map

A bitmap is a string of n binary digits that can be used to represent the status of n items.

The data structures used in the Linux kernel:

- linked-list:

<linux/list.h>

- queue:

kfifo.c

- binary search tree (red-black tree):

<linux/rbtree.h>

Computing enviroments

file-server system provides a file-system interface where clients can create, update, read, and delete files.

compute-server system provides an interface to which a client can send a request, ikn response, the server sends results to the client

Peer-to-Peer (P2P) clients and servers are not distinguished from one another. Instead, all nodes within the system are considered peers, and each may act as either a client or a server, depending on whether it is requesting or providing a service.

- Eliminates the server bottleneck issue in server only systems

Cloud computing is a type of computing that delivers computing, storage, and even applications as a service across a network, it's a logical extension of virtualization, because it uses virtualization as a base for its functionality. Types

- Public/Private/Hybrid Cloud: cloud available via the Internet that can be available to anyone willing to pay (public) or for a company own use (private) or a combination of these

- Software as a service (SaaS) one or more applications (such as word processors or spreadsheets) available via the Internet

- Platform as a service (PaaS) a software stack ready for application use via the Internet (for example, a database server)

- Infrastructure as a service (IaaS) servers or storage available over the Internet (for example, storage available for making backup copies)

Real-Time Embedded Systems

- A real-time system is used when rigid time requirements have been placed on the operation of a processor or the flow of data and tend to have very specific tasks or used as a control device in a dedicated application

- A real-time system has well-defined, fixed time constraints. Processing must be done within the defined constraints and functions correctly only if it returns the correct result within its time constraints.

Free and Open Source Software & Operating Systems history

Free and Open-Source: Free Software not only makes source code available but also is licensed to allow no-cost use, redistribution, and modification. Open-source software does not necessarily offer such licensing.

Proprietary or Closed-Source: Software that is owned by an entity which restricts its use, and carefully protects its source code.

Apple's macOS comprises a hybrid approach. It contains an open-source kernel named Darwin but includes proprietary, closed-source components as well.

- Darwin is based on BSD UNIX ans since its opene source even Apple has to make that code available, in Apple OpenSource package name begin with

xnu

The GNU General Public License (GPL) is a common license under which free software is released. Fundamentally, the GPL requires that the source code be distributed with any binaries and that all copies (including modified versions) be released under the same GPL license

In 1991 the GNU operating system was nearly complete. The GNU Project had developed compilers, editors, utilities, libraries, etc.

However, the GNU kernel never became ready for prime time and in the same year Linus Torvalds released a UNIX-like kernel using the GNU compilers and tools;

the combination of these two thing resulted in the GNU/Linux operating system, the kernel properly called Linux but the

full operating system including GNU tools is called GNU/Linux this OS then was used to create hundreds of unique distributions, or custom builds, of the system.

- GNU/Linux is a free and open-source operating system

BSD UNIX is another UNIX based OS (like GNU/Llinux) which has a longer and more complicated history than Linux. It started in 1978 as a derivative of AT&T's UNIX and released an open-source version, 4.4BSD-lite, in 1994. There are many distributions of BSD UNIX (FreeBSD, NetBSD, OpenBSD, and DragonflyBSD.)

OS Structure

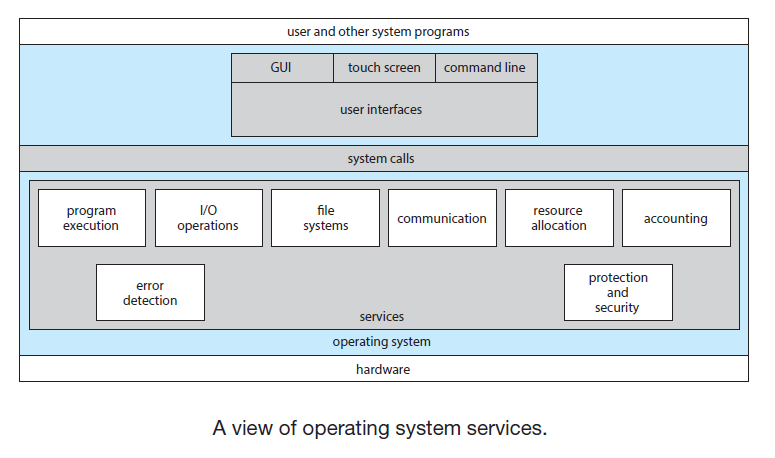

OS Services

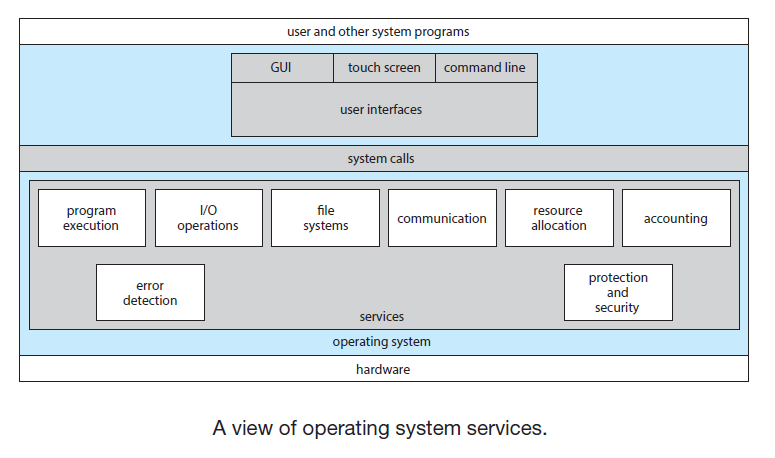

- An operating system provides an environment for the execution of programs. Provides services that are helpful to the user and to ensure efficient operation of the system:

- User interface: GUIs and CLIs

- Program Execution: to load a program into memory and to run that program.

- I/O Operations: The operating system must provide a means to do I/O since users usually cannot control I/O devices directly.

- File-System Manipulation:

- Process communication:

- Error detection: Detect errors to take the appropriate action to ensure correct and consistent computing.

- Resource allocation: such as CPU cycles, main memory, and file storage

- Logging: Resource usage statistics

- Protection and Security: Protection involves ensuring that all access to system resources is controlled.

- Command Interpreters:

- Usually the OS treat the command interpreter as a special program that is running when a process is initiated or when a user first logs on

- Usually the OS implements most commands through system programs. This means it uses the command to identify a file/program to be loaded into memory and executed.

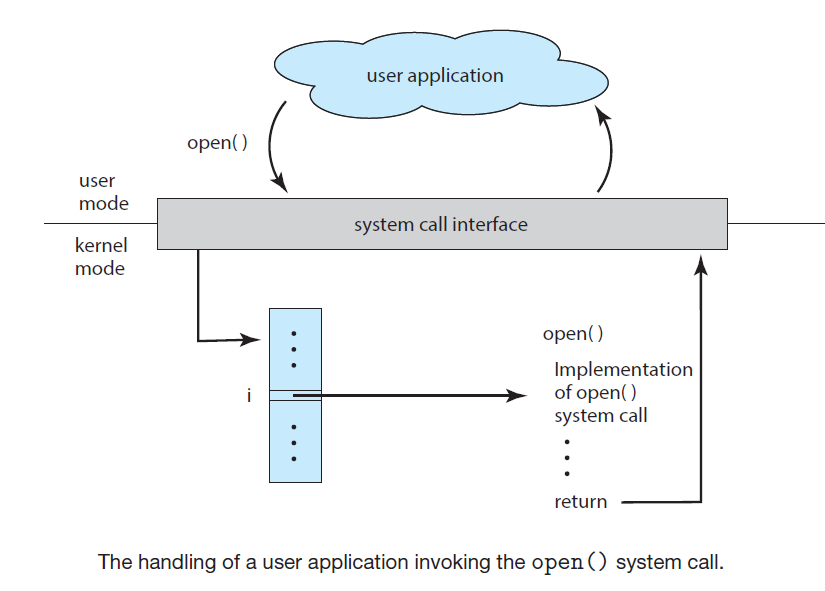

System Calls

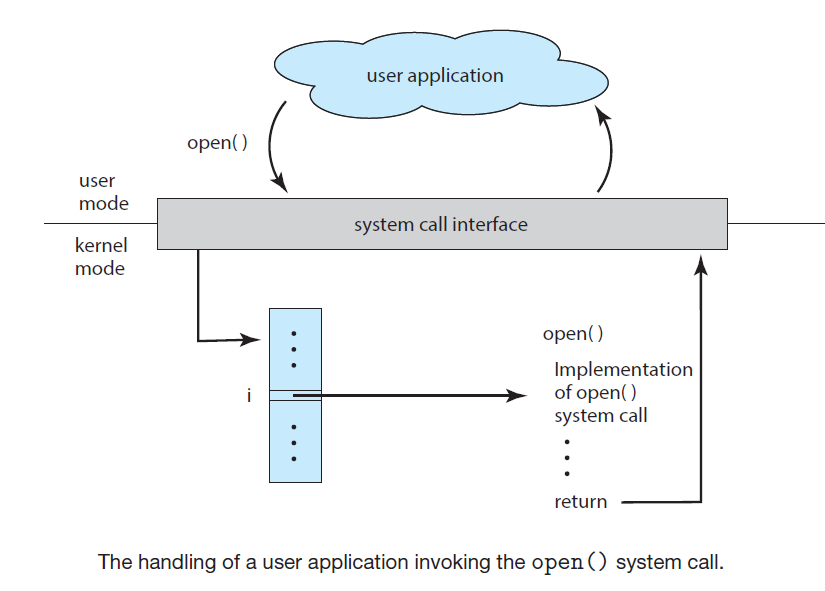

System calls provide an interface to the available OS services

Even simple programs may make heavy use of the operating system adn system calls but this is usually abstracted through an API, in the case of Linux programs written in C the API for this is called libc

Functions that make up an API typically invoke the actual system calls on behalf of the application programmer

The run-time environment (RTE) refers to the full suite of software needed to execute applications written in a given programming language, including its compilers or interpreters as well as other software, such as libraries and loaders.

- Usually The RTE provides a system-call interface that serves as the link to system calls. The system-call interface intercepts function calls in the API and invokes the necessary system calls within the operating system kernel and returns the status of the system call

The view of the operating system seen by most users is defined by the application programs and system programs, rather than by the actual system calls

System Calls Categories

| System Call Category |

Funcionality/Operations |

Windows Examples |

Unix Examples |

| Process control |

- load() and execute() programs

- create_process() and terminate_process()

- set/get_pocess attributes()

- wait for some time wait_time() or wait_event()/signal_event()

- Sync to access resources acquire_lock()/release_lock()

- Memory allocation malloc()/free |

CreateProcess()

ExitProcess()

WaitForSingleObject() |

fork()

exit()

wait() |

| File management |

- create() and delete() files

- file manipulation open(), read(), write(), resposition(), close(), move(), copy(), etc.

- set/get_file attributes() |

CreateFile()

ReadFile()

WriteFile()

CloseHandle() |

open()

read()

write()

close() |

| Device management |

- Allocate and release devices request()/open() and release()/close()

- Device operations read(), write(), specific operations ioctl(), etc.

- set/get_device attributes()

- attach/detach devices |

SetConsoleMode()

ReadConsole()

WriteConsole() |

ioctl()

read()

write() |

| Information maintenance |

- Operations related to the get system information: time(), date(), dump() file/process/system attributes and data in general |

GetCurrentProcessID() , SetTimer()

Sleep() |

getpid()

alarm()

sleep() |

| Communications |

- Communications between processes using a:

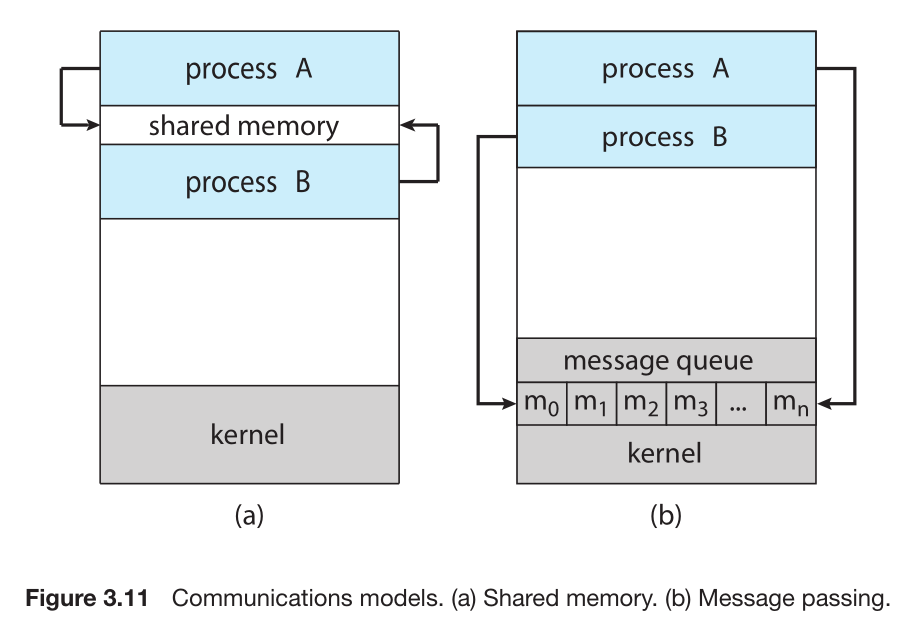

- message passing model: Exchanges messages using a common mailbox first creating a communication connection to send, receive messages and transfer status information, finally closing the connection when done

- shared-memory model: uses operations like shared_memory_create() and shared_memory_attach() to gain access to regions of memory owned by other processes and exchange data in a fast way (process are responsible of sync and dealing with simultaneous writes to the same region) |

CreatePipe()

CreateFileMapping()

MapViewOfFile() |

pipe()

shm_open()

mmap() |

| Protection |

- Controlling access to the resources provided by a computer system through setting permissions (set_permission() and get_permission()) anc controlling users accesses (allow_ user() and deny_user()) |

SetFileSecurity()

InitlializeSecurityDescriptor()

SetSecurityDescriptorGroup() |

chmod()

umask()

chown() |

The similarity between I/O devices and files is so great that many operating systems,merge the two into a combined file-device structure.

The user interface can also make files and devices appear to be similar, even though the underlying system calls are dissimilar.

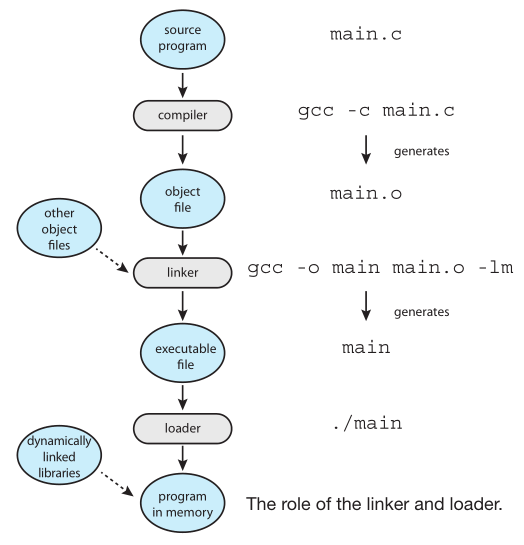

Linkers and Loaders

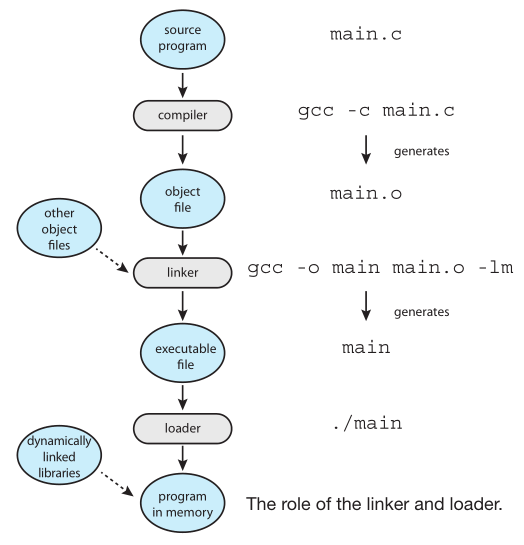

Usually, a program resides on disk as a binary executable file to run, the program must be brought into

memory and placed in the context of a process

Link and load general process:

- Source files are compiled into object files that are designed to be loaded

into any physical memory location (a format known as an relocatable object fil)

- The linker combines these relocatable object files into a single binary executable file

durin this phase other object files or libraries may be included as well

- A loader is used to load the binary executable file into memory, relocation

(which assigns final addresses to the program parts and adjusts code and data in the program)

may occur implicitly when running the program. For example in UNIX systems when doing something like

./main in the terminal:

- The shell first creates a new process to run the program using the

fork() system call

- The shell then invokes the loader with the

exec() system call with the name of the executable file

- The loader loads the specified program into memory using the address space of the newly created process.

Object files and executable files typically have standard formats that include the compiled machine code

and a symbol table containing metadata about functions and variables that are referenced in the program

(this table is needed for dynamic/shared libraries usage)

Linux systems use the ELF (Executable and Linkable Format) format for Object files and executables.

- The

file command can be used to know if a file is an ELF relocatable file or a an ELF executable

- ELF files are divided into a number of sections and can be evaluated using the

readelf

- There are separate ELF formats for relocatable and executable files to include different information, for example one piece of information in the ELF file for executable files is

the program's entry point, which contains the address of the first instruction to be executed

- Although ELF provides a common standard across Linux and UNIX systems, the ELF format is not tied to any specific computer architecture, so it does not guarantee that an executable file will run across different hardware platforms.

For dynamic/shared libraries the linker inserts relocation information that allows it to be dynamically

linked and loaded as the program is loaded

Fundamentally, applications are OS specific because:

- Each OS provides a unique set of system calls for use by applications

- Each operating system has a binary format for applications so the OS can open the file and load the application for proper execution.

- CPU instructioun sets and computer architectures

Applicationss can be made available to run on multipleo Operating system if:

- Using and interpreted language like Python or Ruby or interpreted like language like JAVA that actually uses a virtual machine and an enviroment within it can run applications

- Using a standard API (like

POSIX) to mantain source code compatibility between OSs and then the compiler generates binaries in a machine- and operating-system-specific language

APIs like POSIX specify certain functions at the application level.

At the architecture level, an Application Binary Interface(ABI), which is specified for a given architecture,

is used to define how different components of binary code can interface for a given operating

system on a given architecture. An ABI specifies low-level details, including

address width, methods of passing parameters to functions and system calls,the organization

of the run-time stack, the binary format of system libraries, the size of data types, etc.

If a binary executable file has been compiled and linked according to a particular ABI,

it should run on another system that supports that ABI however ABIs are usually OS and architecture specific

Operating-System Structures

Mechanisms determine how to do something; policies determine what will be done. E.g. The standard Linux kernel has a specific

CPU scheduling algorithm, which is a mechanism that supports a certain policy. However, anyone is free to modify or replace the scheduler to support a different policy.

Although operating systems are large, only a small amount of the code is critical to high performance; the interrupt handlers, I/O manager, memory manager, and CPU scheduler are probably the most critical routines

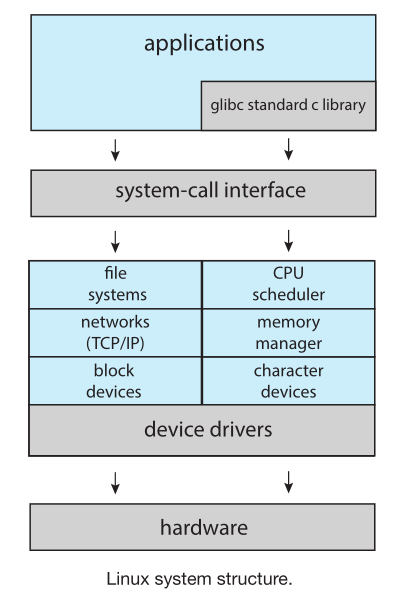

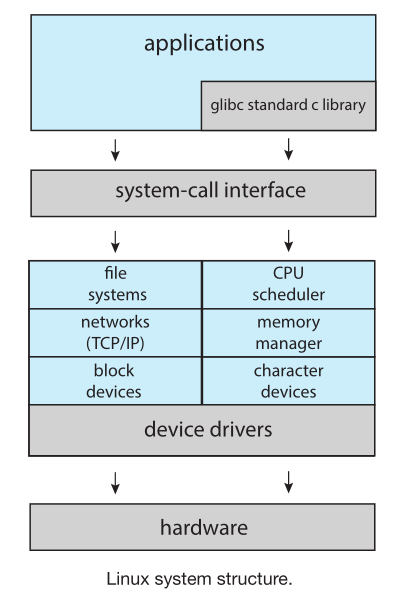

A monolithic OS structure places all of the functionality of the kernel into a single, static binary file that runs in a single address space

- The original UNIX kernel provides the file system, CPU scheduling, memory management, and other operating- system functions through system calls

- Applications typically use the

glibc standard C library when communicating with the system call interface to the kernel.

- The Linux kernel is monolithic in that it runs entirely in kernel mode in a single address space but it has a modular design that

allows the kernel to be modified during run time

One advatange of monolithickernels is that there is very little overhead in the system-call interface and communication within the kernel is fast

A monolithic structure is usually considered a tightly coupled system because changes to one part of the system can have wide-ranging effects on other

unlike a loosely coupled system where changes in one component affect only that component, and no others

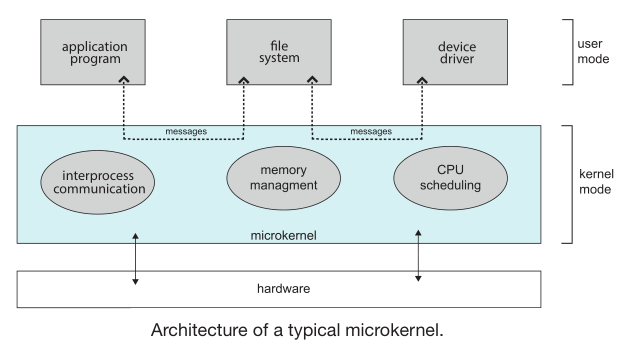

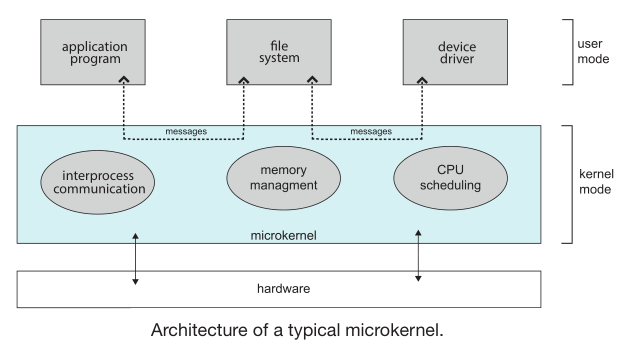

- A microkernel OS approach structures the operating system by removing all non-essential components from the kernel and implementing

them as user-level programs that reside in separate address spaces.

- The main function of the microkernel is to provide communication between application programs and the various services that are also running in user space

- microkernel approach makes it easier to expand the OS and makes it more secure and reliable since most services are running as user processes7

- microkernels tend to suffer from system-function overhead becasue when two user-level services must communicate, messages must be copied between the services, which reside in separate address spaces

- Darwin, the kernel component of the mac OS and iOS operating systems uses a microkernel approach

- In a loadable kernel modules (LKMs) the kernel has a set of core components and can link in additional services via modules, either at boot time or during run time

- In this approach the kernel provide core services, while other services are implemented dynamically, as the kernel is running.

- Linking services dynamically is preferable to adding new features directly to the kernel, which would require recompiling the kernel every time a change was made

- Linux uses loadable kernel modules, primarily for supporting device drivers and file systems.

Build and Boot

Linux General build process

- Download the Linux source code from http://www.kernel.org

- Configure the kernel using the

make menuconfig command. This step generates the .config configuration file.

- Compile the main kernel using the Makefiles and

make utility to produce the vmlinuz file, which is the kernel image (depends on the .config parameters)

- Compile the kernel modules using the

make modules (depends on the .config parameters)

- Use

make modules install too install the kernel modules into vmlinuz

- Install new kernel in the system with

make install

- Reboot to new kernel

Linux kernel image is a compressed file that is extracted after it is loaded into memory

Booting

The process of starting a computer by loading the kernel is known as booting the system

General boot process

- The bootstrap program or boot loader locates the kernel

- The kernel is loaded into memory and started

- The kernel initializes hardware

- The root file system is mounted

Boot loader options

- BIOS which is stored in non-volatile firmware and considered the first bootloader is run, then loads a second bootloader whic is located at the Boot Block

which is stored in at a fixed disk location this either loads the entire OS or usually just knows the address on disk and the length of the remainder of the bootstrap program

- UEFI is another boot option that has a complete boot manager and better support fo r64-bits systems and larger disks

Regarding of the boot option the in addition of loading the kernel the first stage bootstrap program usually runs diagnostics on HW, inspects memory and the CPU, discovers devices, etc.

In general it can initialize all aspects of the system, from CPU registers to device controllers and the contents of main memory

GRUB is an open-source bootstrap program for Linux and UNIX systems. Boot parameters for the system are set in a GRUB configuration

file, which is loaded at startup, it allows modifying kernel parameters and even selecting among different kernels that can be booted

During the boot process, the boot loader typically creates a temporary RAM file system, known as initramfs.

This file system contains necessary drivers and kernel modules that must be installed to support the real root file system

(which is not in main memory). Once the kernel has started and the necessary drivers are installed, the kernel switches the

root file system from the temporary RAM location to the appropriate root file system location. Finally, Linux creates the systemd

process, the initial process in the system, and then starts other services (for example, a web server and/or database)

The booting mechanism is not independent from the boot loader. Therefore, there are specific versions of the GRUB boot loader for

BIOS and UEFI, and the firmware must know as well which specific bootloader is to be used

core dump = memory dump because Memory was referred to as "core" in the early days of computing

- BCC (BPF Compiler Collection) is a rich toolkit that provides tracing and debugging features for Linux systems

Processes

A process is a program in execution

Systems consist of a collection of processes, some executing user code, others executing operating system code.

The status of the current activity of a process, in optehr words the status/state of a process is represented by the value

of the program counter and the contents of the processor's registers

A process is an active entity, with a program counter specifying the next instruction to execute and a set of associated resources.

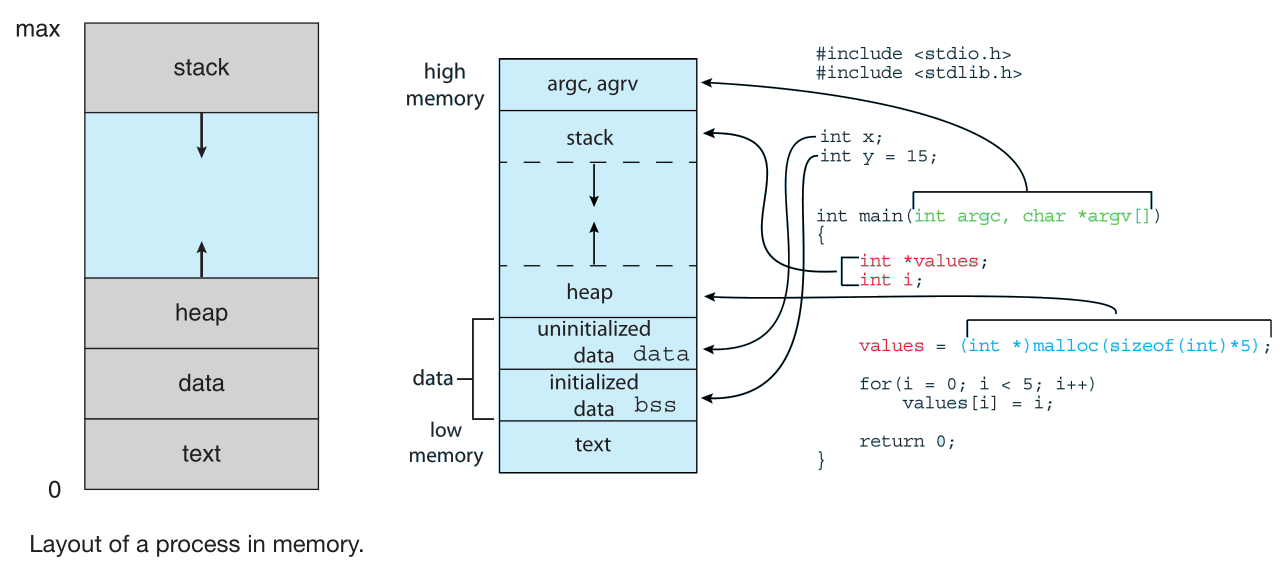

A program becomes a process when an executable file is loaded into memory.

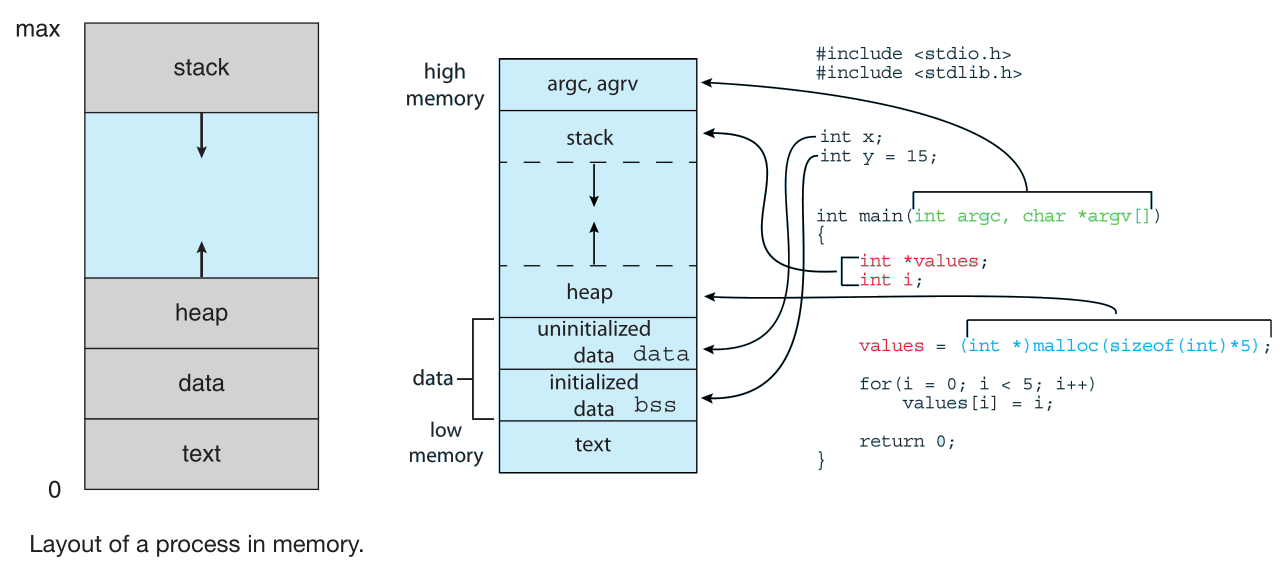

The memory layout of a process is typically divided into multiple sections:

text = code: The executable codedata = global variables: Global variables and other static data related to the processheap = dynamic memory: memory that is dynamically allocated during program run timestack = stack memory: For temporary data storage when invoking functions (such as function parameters, return addresses, and local variables)

text and data sizes are fixed, as their sizes do not change during program runtime while heap and stack can change

(shrink and grow dynamically) and in general grow toward one another, the OS must ensure they do not overlap one another.

You can use the size <executable_name> command to determine the size (in bytes) of a process sections

- Although two processes may be associated with the same program for example several users may be running different copies of the mail program,

each of these is a separate process although the text sections are equivalent, the the data, heap, and stack section vary. It is also possible

that a process spawns many other processes as it runs

a process can itself be an execution environment for other code. For example the JVM (Java virtual machine) executes as a process

that interprets the loaded Java code and takes actions (via native machine instructions) on behalf of that code.

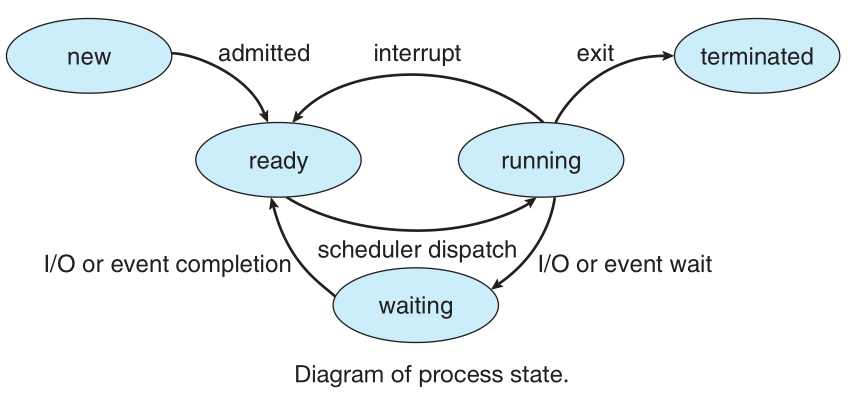

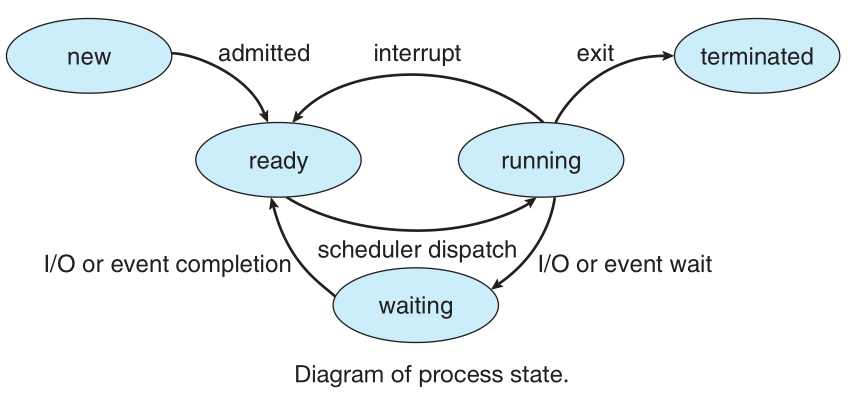

- A process may be in one of the following execution states

- New: The process is being created.

- Running: Instructions are being executed.

- Waiting: The process is waiting for some event to occur (such as an I/O completion or reception of a signal).

- Ready: The process is waiting to be assigned to a processor.

- Terminated.: The process has finished execution.

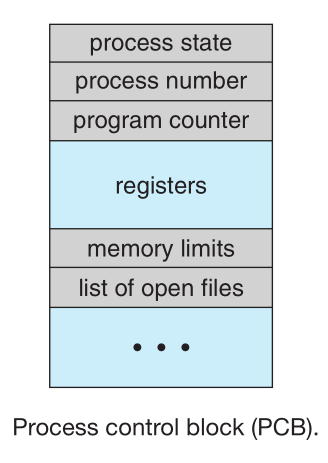

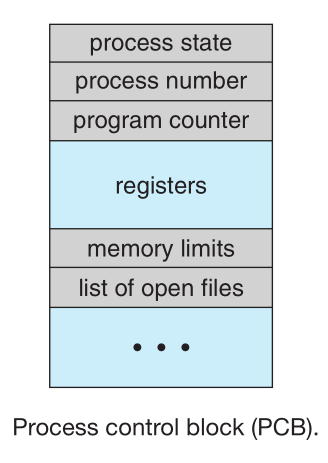

- Each process is represented in the operating system by a Process Control Block (PCB) — also called a task control block on some OSs

which contains many pieces of information associated with a specific process

- Process state: Execution state of the process (can be new, ready, running, etc.)

- Program Counter: Address of the next instruction to be executed for this process, NOT updated every instruction, needs to be updated only when the process is interrupted

- CPU registers saved when process is interrupted, to allow the process to be continued correctly afterward when it is rescheduled to run.

- CPU-scheduling information: Includes information about a process priority, pointers to scheduling queues, and any other scheduling parameters.

- Memory-management information Includes information of the base and limit registers and the page tables, or the segment tables, depending on the memory system used by the OS

- Accounting information: Includes information about the amount of CPU and real time used, time limits, stats, etc.

- I/O status information: This information includes the list of I/O devices allocated to the process, a list of open files, etc.

In general the PCB serves as the repository for all the data needed to start or restart a process

A process can run multiple threads On systems that support threads, the PCB is expanded to include information for each thread.

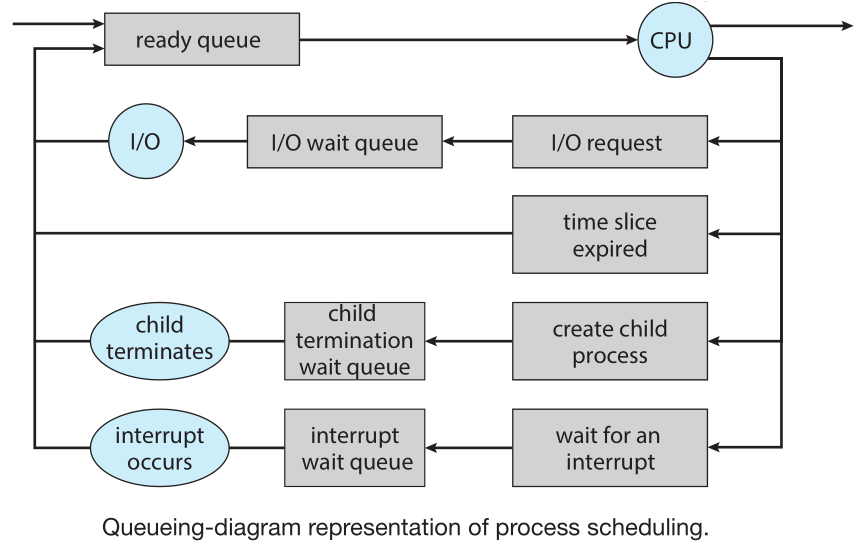

The objective of multiprogramming is to have some process running at all times so as to maximize CPU utilization.

Time sharing the CPU refers to switching a CPU core among processes so frequently that users can interact with each program while it is running

A PCB on Linux is represented by the struct task struct found in the <include/linux/sched.h> which contains all the necessary information for

representing a process

An I/O-bound process is one that spends more of its time doing I/O while a CPU-bound process, in contrast, generates I/O requests infrequently, using more of its time doing computations.

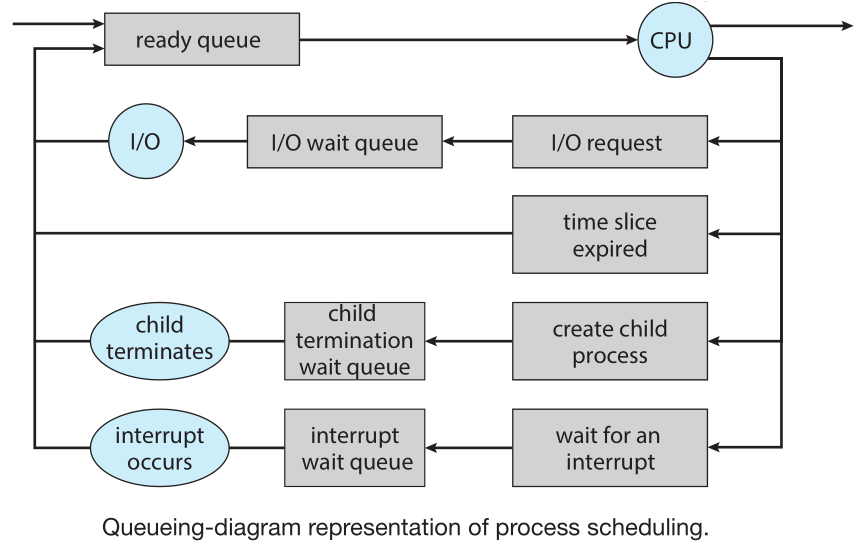

A new process is initially put in the ready queue. It waits there until it is selected for execution, or dispatched.

Once the process is allocated a CPU core and is executing it can go into a wait state (due to IO request, expire time slice, create a new child process and wait for it, etc) or terminate

The role of the CPU scheduler is to select from among the processes that are in the ready queue and allocate a CPU core to one of them,

other events can also cause the operating system to change a CPU core from its current task and to run a kernel routine

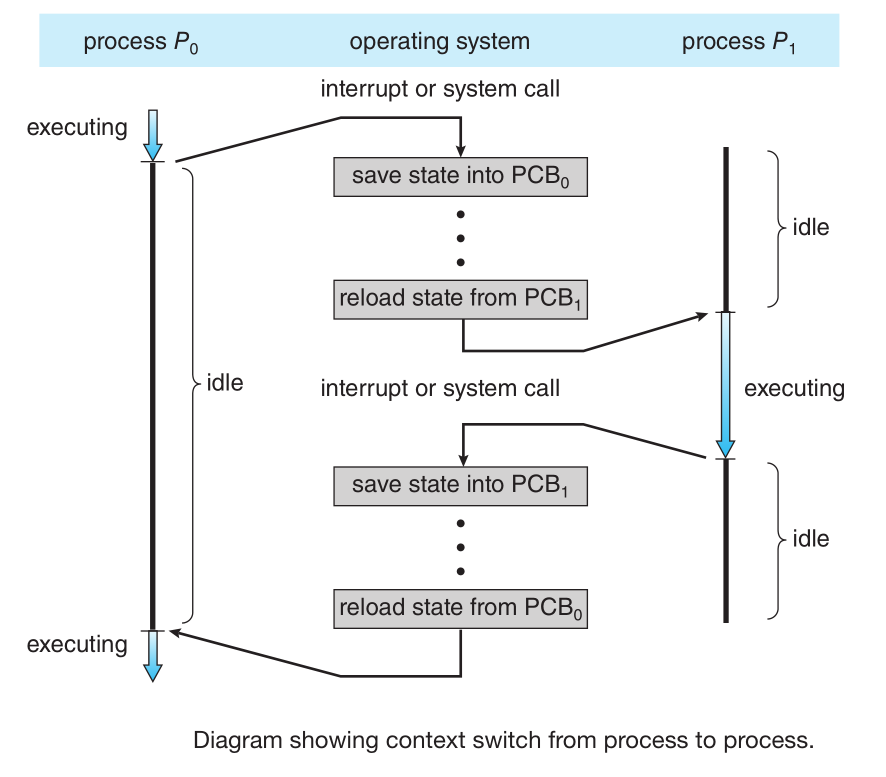

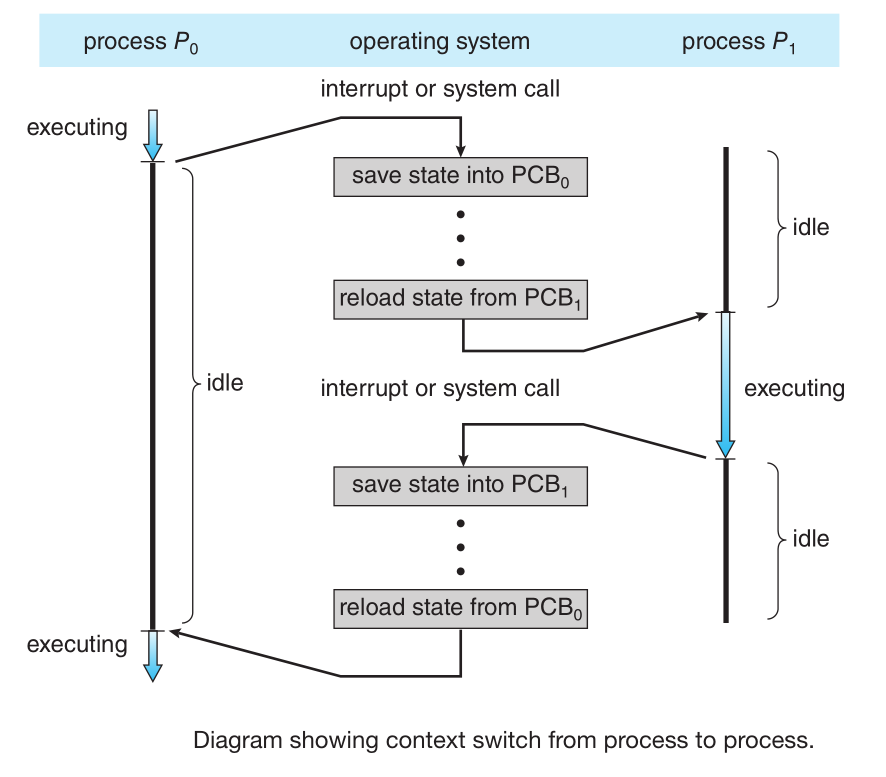

- context switch Refers to switching the CPU core to another process, this requires performing a state save of the current process

and a state restore of a different process (be it to switch to a kernel process or just to another user process), the kernel of the OS

saves the context of the old process in its PCB and loads the saved context of the new process scheduled to run

Processes Operations

A process (parent process) may create several new processes (child processes)

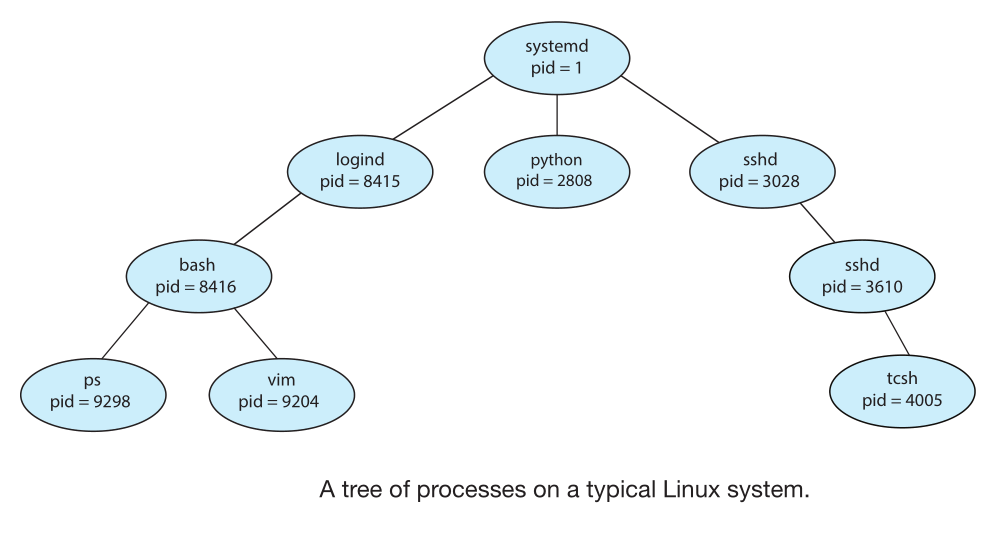

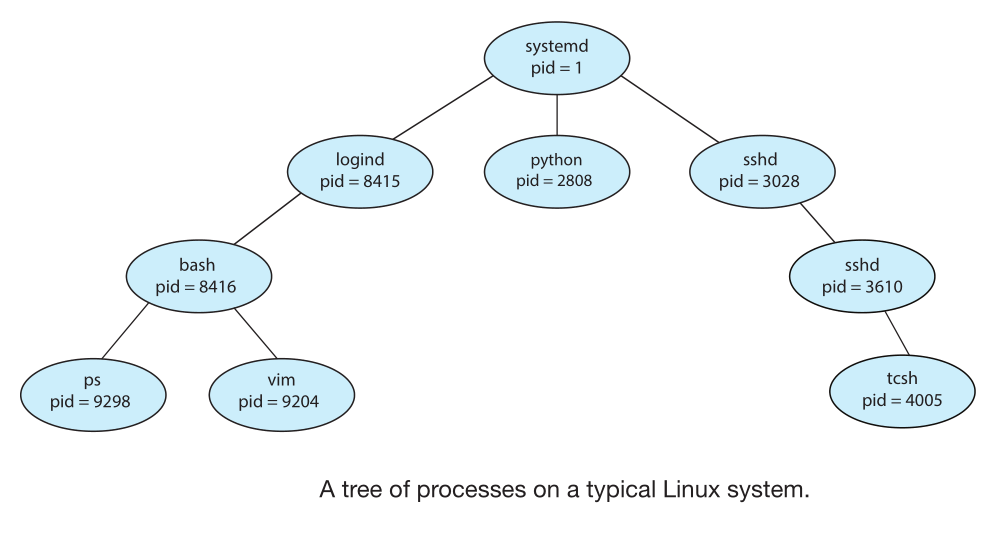

In linux the systemd process (which always has a pid of 1) serves as the root parent process for all user processes,

and is the first user process created when the system boots. once booted the systemd process creates processes which

provide additional services such as a web or print server, an ssh server, logind (manage users login), etc

You can use the command ps -el to list complete information for all processes currently active or pstree to show a tree of all the processes in the system

When a process creates a new process, two possibilities for execution exist:

- The parent continues to execute concurrently with its children

- The parent waits until some or all of its children have terminated

There are also two address-space possibilities for the new process:

- The child process is a duplicate of the parent process (it has the same program and data as the parent).

- The child process has a new program loaded into it.

A new process is created by the fork() system call. The new process consists of a copy of the address space of the original process.

Both processes (the parent and the child) continue execution at the instruction after the fork() but the return code for the fork()

is zero for the new (child) process, whereas the (nonzero) process identifier of the child is returned to the parent.

After a fork() system call, one of the two processes typically uses the exec() system call to replace the process’s memory space with a new program.

The exec() system call loads a binary file into memory (destroying the memory image of the program containing the exec() system call) and starts its execution

The call to exec() overlays the process's address space with a new program, so it does not return control unless an error occurs

A process terminates when it finishes executing its final statement and asks the operating system to delete it by using the exit() system call,

it can return a status value to its waiting parent process (via the wait() system call which besides status returns process identifier of the terminated child).

under normal termination, exit() will be called either directly or indirectly, as the C run-time library (which is added to UNIX executable files)

will include a call to exit() by default.

when a child process finishes before the parent, the child will become a zombie process, if this happens and then the parent finishes it leaves child processes as orphans

Interprocess Communication (IPC)

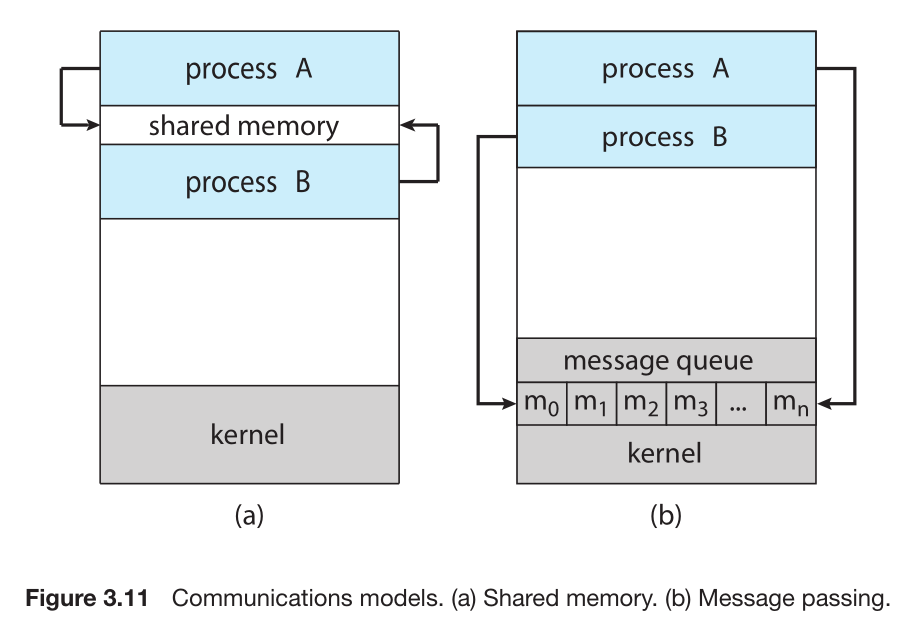

Interprocess Communication (IPC) refers to the mechanism that will allow processes to exchange data (send data to and receive data from each other)

Types/Models of interprocess communication: shared memory and message passing

- shared memory: a region of memory that is shared by the cooperating processes is established

- message passing: communication takes place by means of messages exchanged between

Threads and Concurrency

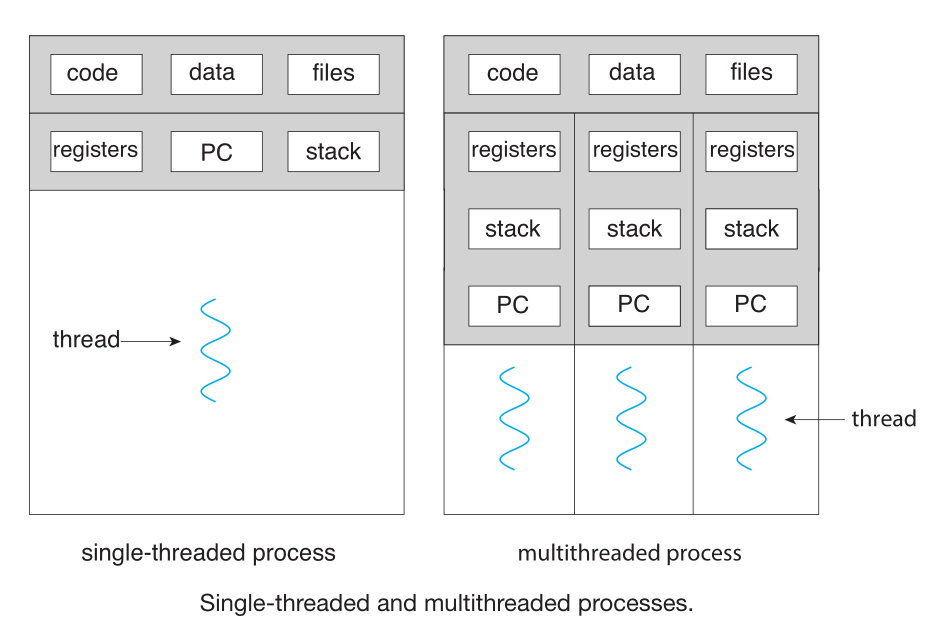

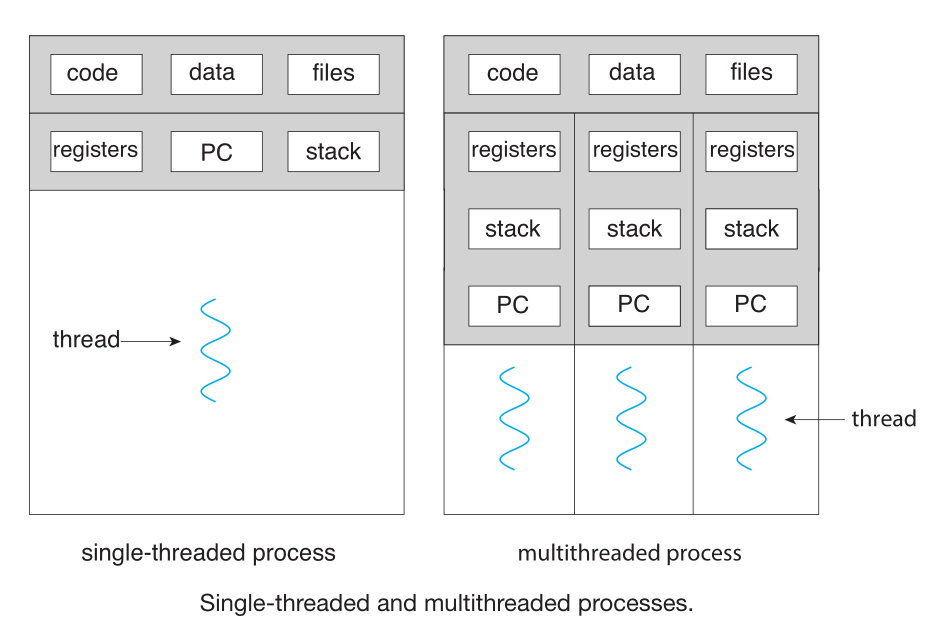

A thread is a basic unit of CPU utilization; it comprises a thread ID , a program counter (PC), a register set, and a stack.

It shares with other threads belonging to the same process its code section, data section, and other operating-system resources, such as open files and signals

A traditional process has a singlethread of control

Most operating system kernels are also typically multithreaded. For example during system boot time on Linux systems, several

kernel threads are created. Each thread performs a specific task, such as managing devices,memory management, or interrupt handling

In Linux use the command ps -ef and check kthreadd (with pid = 2) which serves as the parent of all other kernel threads

Multithreading programming is used to improve

- Responnsives: other parts of the program can run while its blocked/waiting for another operation

- Easier Resources sharing due to threads share code and data

- Resources economy: context switch is faster between threads than between processes

A concurrent system supports more than one task by allowing all the tasks

to make progress. In contrast, a parallel system can perform more than one task

simultaneously.

The maximum speedup we can get from an application using a multithreaded approach is bounded by the percentage of the appplication that must be performed serially (S), thi is

Amdahl's law = 1/S. For example if 50% of an app must be performed serially the maximum speedup we will get by adding more cores and threads is 1/0.50=2.0

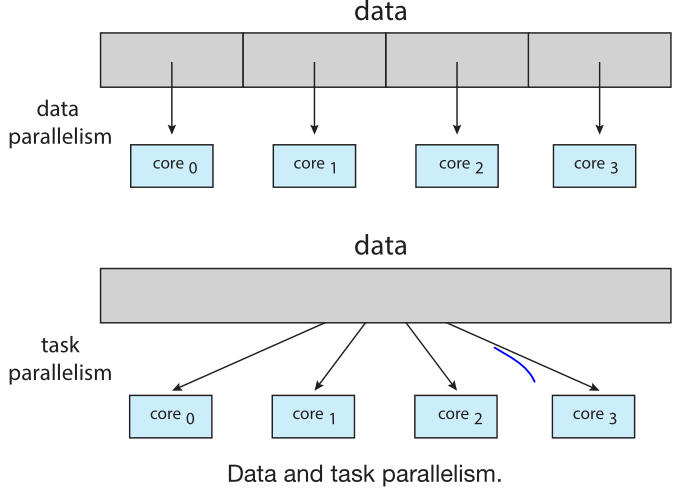

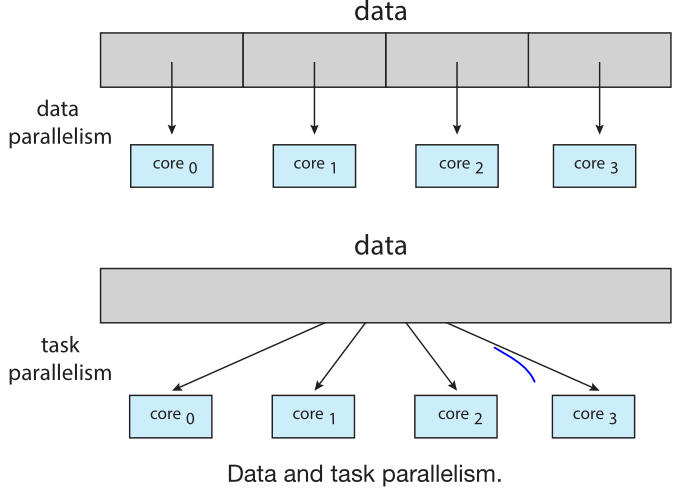

Data parallelism focuses on distributing subsets of the same data across multiple computing cores and performing the same operation on each core. In general Data parallelism = Divide data

Task parallelism involves distributing threads across multiple computing cores where each thread is performing a unique operation.

Different threads may be operating on the same or different data.

Support for threads may be provided either at the user level, for user threads, or by the kernel, for kernel threads which

are supported and managed directly by the operating system, ultimately, a relationship must exist between user threads and kernel threads since

to run on a CPU , user-level threads must ultimately be mapped to an associated kernel-level thread

Most current OS use the one-to-one model to map user-level threads to kernel level thread in this model each user thread is mapped to a kernel thread,

creating a user thread requires creating the corresponding kernel thread, Linux and Windows use this mnodel

Other mapping models like many-to-one and many-to-many relly on using lightweight processes (LWP) which can be considered virtual CPUs managed my the kernel,

the OS schedules the LWPs kernel threads onto a physical CPU core

A thread library provides the programmer with an API for creating and managing threads

- A user space thread library means all code and data structures for the library exist in user space and

invoking a function in the library results in a local function call in user space and not a system call.

- A kernel space thread library measn at least some code and data structures for the library exist in kernel space. Invoking a function in the API for the library typically results in a system call to the kernel.

Pthreads refers to the POSIX standard (IEEE 1003.1c) defining an API for thread creation and synchronization which is a specification not an implementation.

Signals

A signal is used in UNIX systems to notify a process that a particular event has occurred and 'signals' a particularr event,

once delivered it must be handled

synchronous signals are delivered to the same process that performed the operation that caused the signal for example a diviusion by zero or an illegal memory access

When a signal is generated by an event external to a running process, that process receives an asynchronous signal for example terminating a process with ctrl+c

A signal may be handled by

- default signal handler: every signal has one default handler hat the kernel runs when handling that signal (this could result on ignoring the signal or other action like terminating a process in the case of illegal memory access)

- user-defined signal handler: it can override the default handler

Delivering signals in a multithreades process can results in: deliver only to thread which the signal applies, deliver to all threas, deliver to certain thread, assign a thread to handle all signals

we use the kill(pid t pid, int signal) to deliver a signal

Linux does not distinguish between processes and threads. In fact, Linux uses the term task rather than process or thread when referring to a flow of control within a program.

- A unique kernel data structure (

task_struct) exists for each task in the system

- Holds pointers to data structures that represent the list of open files, signal-handling information, and virtual memory

fork() and clone() a new task_struct is created but with clone() instead of copying all data structures pointed by the original only some are copied

depending on the set of flags passed to clone()

CPU Scheduling

It is kernel-level threads not processes that are in fact being scheduled by the operating system however the terms "process scheduling" and "thread scheduling" are used interchangeably

multiprogramming refers to the idea of having some process running at all times, to maximize CPU utilization so a process

is executed until it must wait or until a certain time has passed then the operating system takes the CPU away from that process and

gives the CPU to another process.

A scheduler cane be preemptive if it interrupts processes to give CPU time to other processes or non-preemptive if it always waits

for process relinquish the CPU voluntarily (due to an IO wait for example) or if it waits for processes to finish

the scheduler usually refers only to the code/algorithm that selects the next process to run while the dispatcher refers

to the code that gives control of the CPU to a process which involves context switch, jum to the proper location in the user code to resume the program

and gracefully switching to user mode (considering the code is comming from kernel mode where context switch is usually done)

In scheduling metrics we want to maximize CPU utilization and throughput and minimize turnaround time, waiting time, and response time:

- CPU utilization percentage of CPU usage

- Throughput: number of processes that are completed per time unit (

(total completed processes) / (total time to complete processes))

- Turnaround time: The interval from the time of submission of a process to the time of its completion

time process ends - time process was submitted

- Waiting time: amount of time waiting while being ready to execute (not including time waiting for IO or other wait time)

- Response time: The time from the submission of a request until the first response is produced, in other words the time it

takes to start responding, not the time it takes to output the response.

process-contention scope (PCS) refers to the competition for the CPU that takes place among threads belonging to the same

process or in other words user level threads competing for the CPU through a LWP (virtual CPU), which occcur on many-to-many model

system-contention scope (SCS) refers to the competition for the CPU that takes place amig kernel level threads this occurs in the one-to-one model

since each user-level thread is mapped to a kernel level thread

The standard approach for supporting multiprocessors is symmetric multiprocessing (SMP), where each processor is self-scheduling,

this means having a scheduler for each processor which examines the ready queue and select a thread to run (usually each processor has each own private queue)

A memory stall refers to the waiting a processor has to do to get some data, this can occur due to cache miss or simple access time

OS attempt to keep a thread running on the same processor and take advantage of a warm cache. This is known as processor affinity

Linux for example supports hard affinity by allowing a thread tospecify the set of CPUs on which it is eligible to run (using the sched setaffinity() system call)

Memory Management

Main memory and the registers built into each processing core are the only general-purpose storage that the CPU can access directly. There are machine

instructions that take memory addresses as arguments, but none that take "disk addresses". If data is not in memory, it must be moved there before the CPU can operate on it.

main memory, is accessed via a transaction on the memory bus that the reason a memory access can take more than one clock cycle, in that case

the CPU usually needs to stall since it does not have data required to complete the instruction

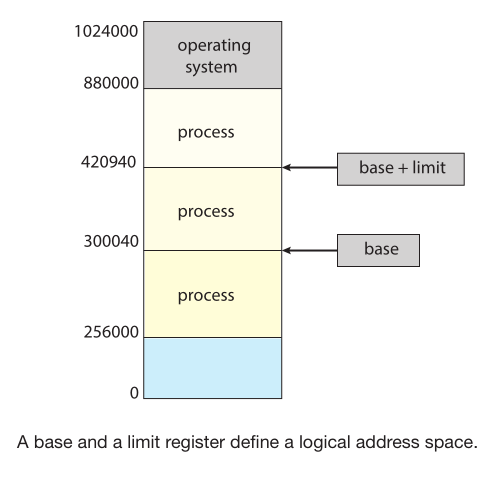

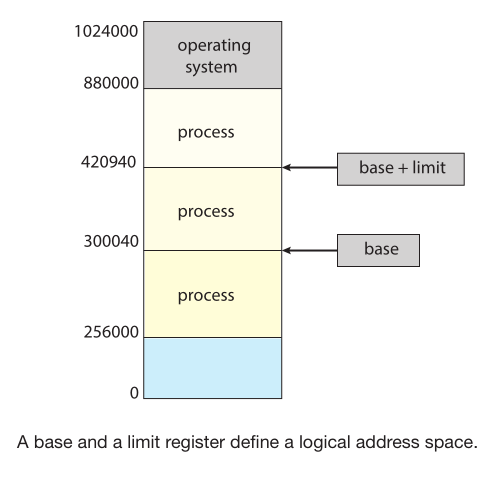

Memory is divided to make sure that the OS and each process has a separate memory space to do this we need HW support for example a

base register (a.k.a relocation register) which holds the smallest legal physical memory address and a limit register that specifies the size of the range

with this we can create a protection by making the HW compare addresses generated in user mode with the registers and trap invalid accesses.

These registers can only be modified by the OS which runs in a privileged mode with a privileged instruction

Addresses in a source program are generally symbolic, a compiler binds these symbolic addresses to relocatable addresses, then the

The linker or loader binds the relocatable addresses to absolute addresses

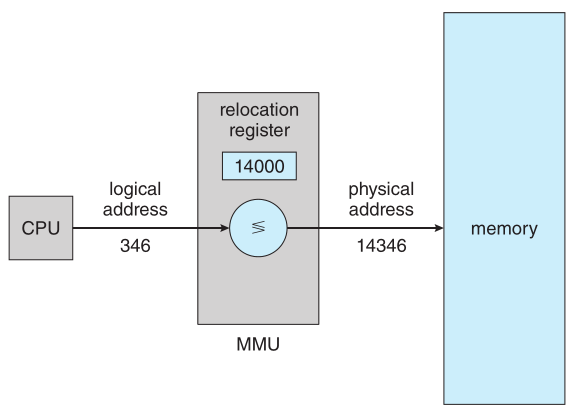

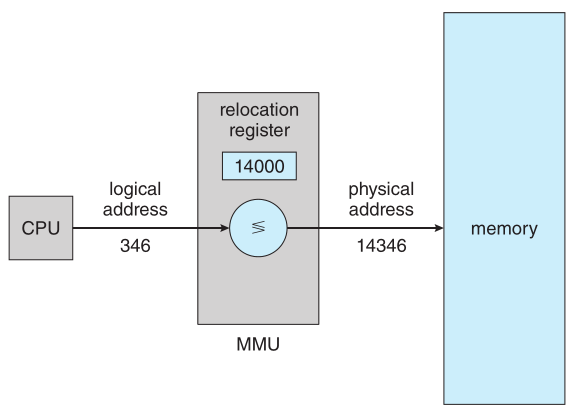

CPU generates logical addresses which come from the code which at run-time are mapped to physical addresses this is done by a

hardware device called the memory-management unit (MMU)

One of the steps of the mapping involves the in the base/relocation register which is added to every address generated by a user

process at the time the address is sent to memory

The user program generates only logical addresses and thinks that the process runs in memory locations from 0 to a max address.

In reality these are mapped to physical addreses involving the base/relocation register

Whena program references a routine that is in a dynamic library, the loader locates the Dynamically linked library (DLL) or shared Library,

loading it into memory if necessary. It then adjusts addresses that reference functions in the library to the location in memory where

the library is stored. version information must be included in both the program and the library to reference the appropiate library code since

more than one version of a library may be loaded into memory, and each program uses its version information to decide which copy of the library to use.

modern systems place the operating system in high memory

External fragmentation exists when there is enough total memory space to satisfy a request but the available spaces are not contiguous

Internal fragmentation refers to unused memory that is internal to a block fue to division of block size memory units

When we use a paging scheme, we have no external fragmentation but wemay have internal fragmentation

Paging

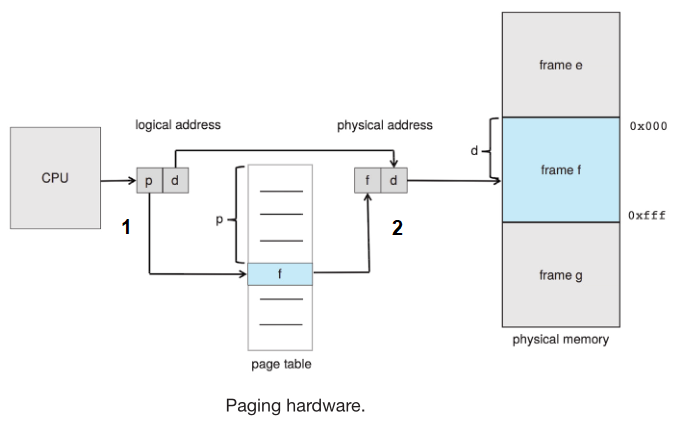

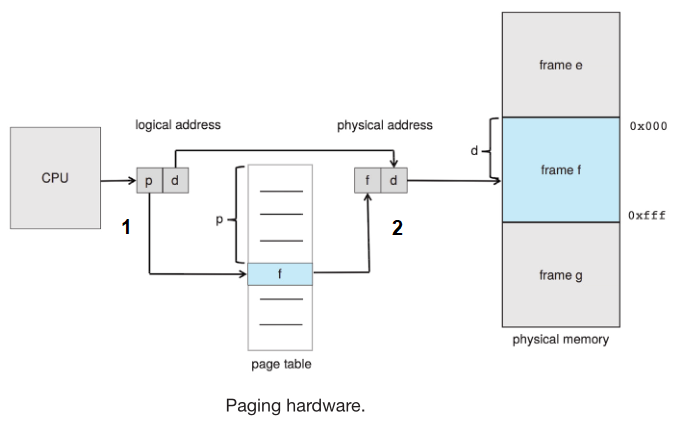

paging is a memory-management scheme that permits a process's physical address space to be non-contiguous in physical memory

With paging physical memory is broken into fixed-sized blocks called frames and logical memory is broken into blocks of the same size called pages

- frame = physical memory block

- page = logical/virtual memory block

When a process is to be executed, its pages are loaded into any available memory frames from their source, which

can be secondary storage which at the same time is usually divided into fixed-sized blocks

page and frame size is defined by the OS but depends on the HW support (HW usually supports from 4KB to 1GB per page/frame)

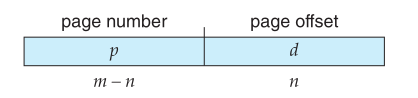

- size of logical address space =

2^m bytes. Where m = number of logical pages

- page/frame size

2^n bytes

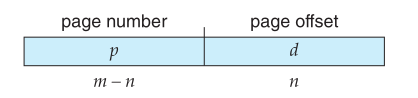

Every address generated by the CPU is divided into two parts

- page number (

p): it refers to the index of a page table that is maintaned per-process

- page offset (

d): offset within the frame in physical memory

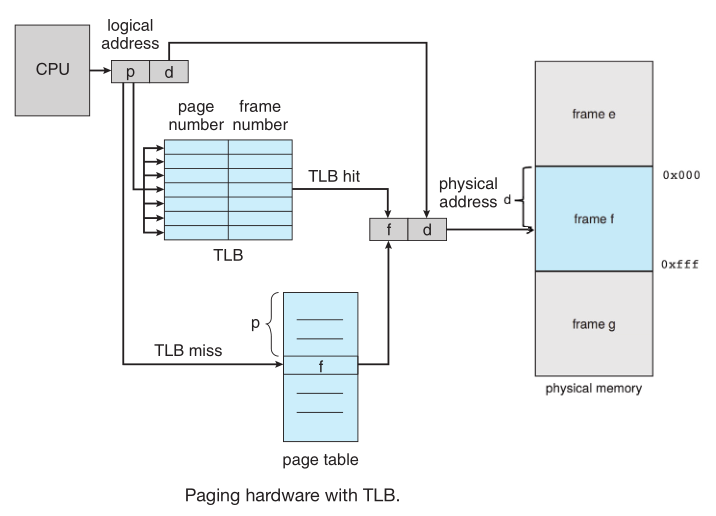

- MMU address translation:

- Use

p to get frame number (f) from page table

- Combine frame number with offset to get actual physical address

New process paging memory allocation process:

- its size, expressed in pages, is examined (if the process requires

n pages, at least n frames must be available in memory)

- if available

n frames are allocated

- The first page of the process is loaded into one of the allocated frames, and the frame number is put in the page table for this process

- Following pages of the process are loaded into frames and frame numbers are put into the page table

The programmer views memory as one single space, containing only this one program. In fact, the user program is scattered

throughout physical memory, which also holds other programs.

A frame table is a data struct maintained by the OS that contains ifnroamtion about state of physical memory for example which frames are allocated,

which frames are available, how many total frames there are)

The frame table has one entry for each physical page frame, indicating whether this frame is free or allocated and, if it is allocated,

to which page of which process (or processes).

The operating system maintains a copy of the page table for each process which is used to define/set the hardware page table, the one used by the MMU,

when a process is to be allocated the CPU to be executed

The page table copy is also used swhenever the operating system must map a logical address to a physical address manually fro example in

IO system call that returns data in a buffer/address

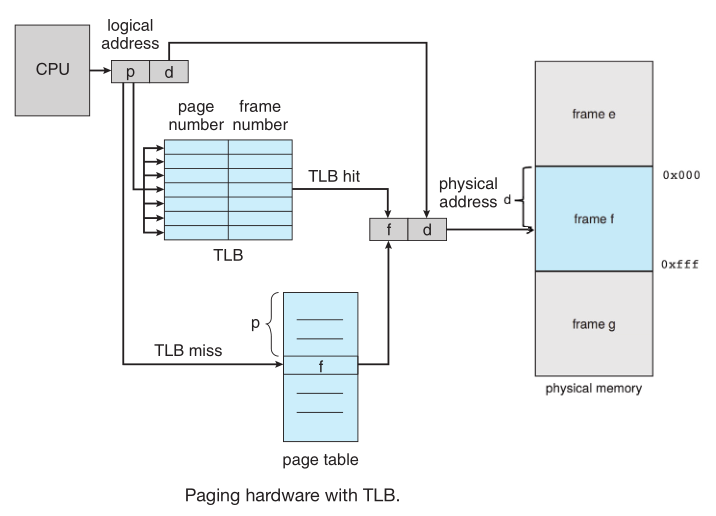

the page tables are kept in main memory, and a page-table base register (PTBR) points to the current page table used by uthe HW MMU.

Changing page tables requires changing only this one register

Since page table is kept in memory to do an access the CPU fisrt need to fecth the frame number from memory using the PTBR to speed this up

the HW can use a translation look-aside buffer (TLB) which has a small lookup table for page table entries (from 32 to 1024 entries), the TLB can contain

address-space identifier (ASIDs) to identify each process if thats not the case then every time a new page table is selected (due to context switch for example),

the TLB must be flushed/erased to ensure that the next executing process does not use the wrong translation information

Memory protection in a paged environment is accomplished by protection bits associated with each frame, these bits are also kept in the page table

A valid-bit is also attached to each entry in the page table to signal if a page is in the process's logical address space, which make it valid for accesses.

The operating system sets this bit for each page to allow or disallow access to the page

To avoid each process to load its own copy of a library pages can be shared if the code is reentrant so only one copy is kept

in memory and the page table for each user process maps onto the same physical copy

Reentrant code is non-self-modifying code: It never changes during execution so two or more processes can execute the same

code at the same time (data section is of course different)

for 64-bit architectures, hierarchical page tables are generally considered inappropriate

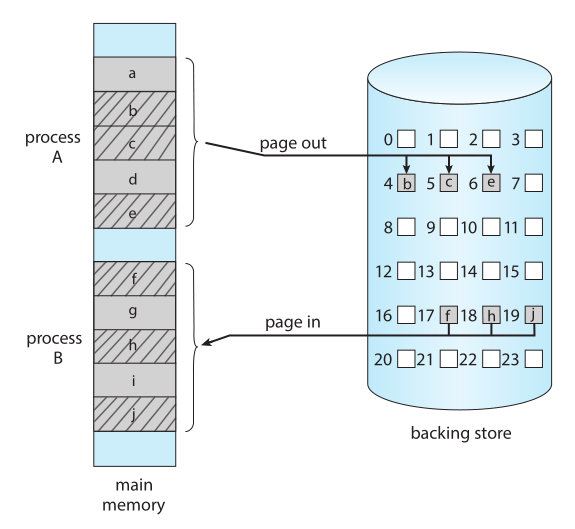

Swapping

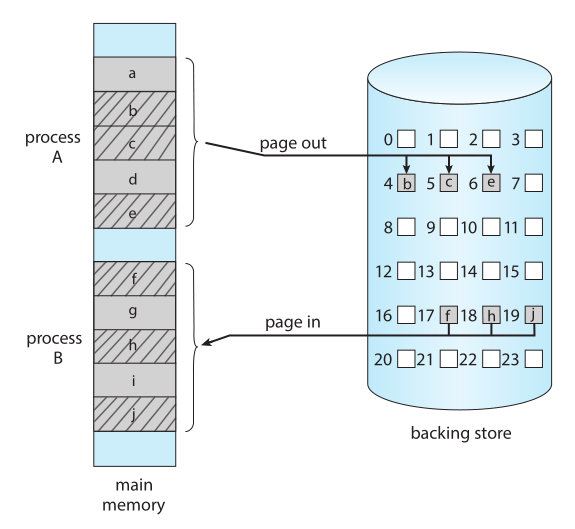

Process instructions and the data they operate on must be in memory to be executed. However, a process, or a portion of a process, can be swapped

temporarily out of memory to secondary storage and then brought back into memory for continued execution

In modern systems a swapping occurs at a page level, a page out operation moves a page from memory to the secondary storage and a page in brings it to memory

- when a system is under going any form of swapping, it is a sign there are more active processes than available physical memory.

Memory Management in Intel

IA-32 architecture

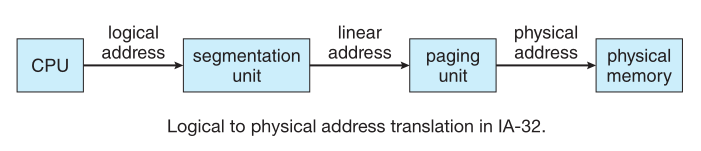

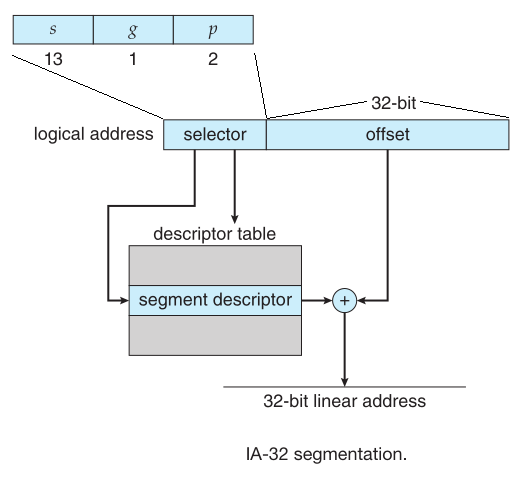

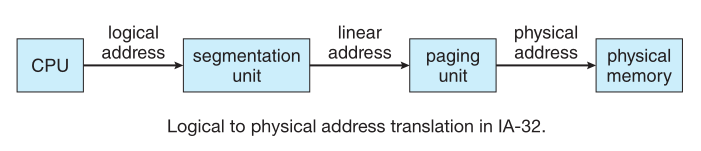

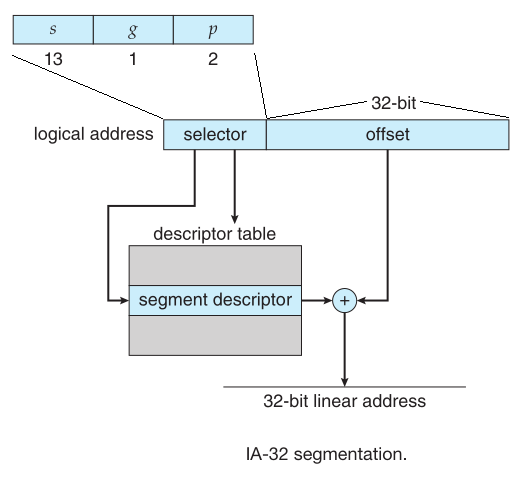

Memory management in IA-32 systems is divided into two components: segmentation and paging

The CPU generates logical addresses, which are given to the segmentation unit, this produces a linear addrees which is the

used by the paging unit to generate the physical address

A Segment can be as large as 4 GB and the maximum number of segments per process is 16384 Segments (16K) these segments are dividied into two partitions

- First partition:

- Up to 8192 segments (8K) that are private to the process.

- Information about this partition is stored in the local descriptor table (LDT)

- Second Partition:

- Up to 8192 segments (8K) that are global segments, which means can be shared between processes.

- about this partition is stored in the global descriptor table (GDT)

Entries on the LDT and the GDT partition tables consists of an 8-bytes egment descriptor with information about a particular segment,

including the base location and limit of that segment.

The machine has six segment registers, allowing six segments to be addressed at any one time by a process and also 8-byte registers to store the LDT or the GDT descriptor for a segment

The logical address is a pair of selector and offset, where:

- selector:

s defines segment numberg signals if segment is global or privatep bits for protection

- ofsset: 32-bit number specifying the location of the byte within the segment

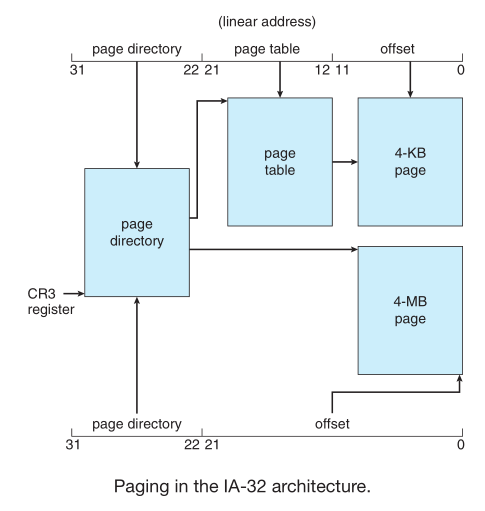

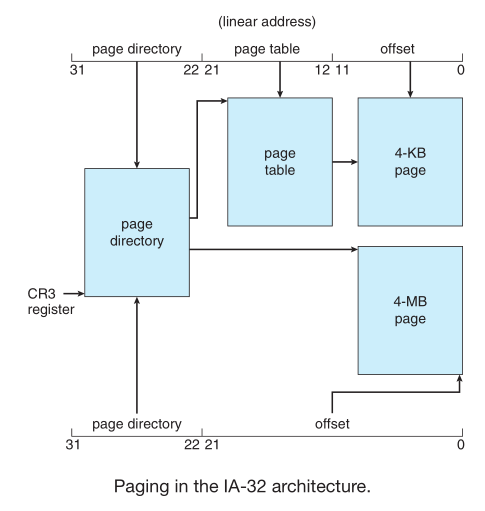

- IA-32 allows page size of either 4KB using two-level paging scheme or 4MB using a single-level

- Page tables can be swapped so one entry of the table signals size and validity of the table

- The

CR3 register points to the page directory for the current process

- To avoid the 4-GB memory limitations of 32-bit architectures Intel implemented page address extension (PAE), which uses a three level paging scheme

- The x86-64 architecture currently provides a 48-bit virtual address with support for page sizes of 4KB, 2MB, or 1GB using four levels of paging hierarchy.

Virtual memory

- Virtual memory is a technique that allows the execution of processes that are not completely in memory so programs can be larger than physical memory.

References

Images and content taken from:

- Operating System Concepts, 10th Edition (2018), Abraham Silberschatz; Peter Baer Galvin; Greg Gagne. Hoboken NJ, USA : Wiley